20/09/22

Master Web3 Fundamentals: From Node To Network

Read time

35 min

Web3 is vast and complex, combining various components, technologies, and concepts. Whether you are new to Web3, blockchain and crypto or a veteran, this piece gives you a high-level overview of the components that power Web3, so that you can understand the purpose and benefits of each one. Specifically, this series aims to:

- Provide an overview of fundamental Web3 components

- Evaluate the purpose of the various components

We define Web3 as the next iteration of the internet, combining what we’ve come to love from today’s internet with verifiable digital ownership, open systems, transparency and immutability. Web3, blockchain and crypto are three closely related topics, but are considered here as three separate terms:

- Blockchain: a technological innovation that enables verifiable digital ownership, transparency and immutability

- Crypto: short for cryptocurrency, describes cryptographically-secured tokens on a blockchain network

- Web3: encompasses blockchain, crypto, and all ecosystems and innovations built on top of them

To understand Web3, we must first understand the underlying blockchain and crypto technologies. While Web3 is still a relatively young concept with Bitcoin having launched only in 2009, the industry is growing rapidly with new technological innovations entering the market at insane speeds.

I hope that this series helps you navigate Web3 and can help you identify an area of interest that you can research on your own beyond this series. Dozens of links for additional material diving deeper into every topic can be found scattered throughout to help guide you to helpful content.

This series is divided into three pieces, with this piece covering all you need to know, from Web3 node infrastructure to how Layer1 blockchain networks work. The next piece will cover Layer2, interoperability and the vast dApp ecosystems built on the primitives outlined in this piece. Finally, the last piece will cover the off-chain environment and on/off-chain communication.

Content

- Web3 Infrastructure Overview

- On-chain ecosystem

- The Node Layer

- The Network Layer

- Closing

Web3 Infrastructure Overview

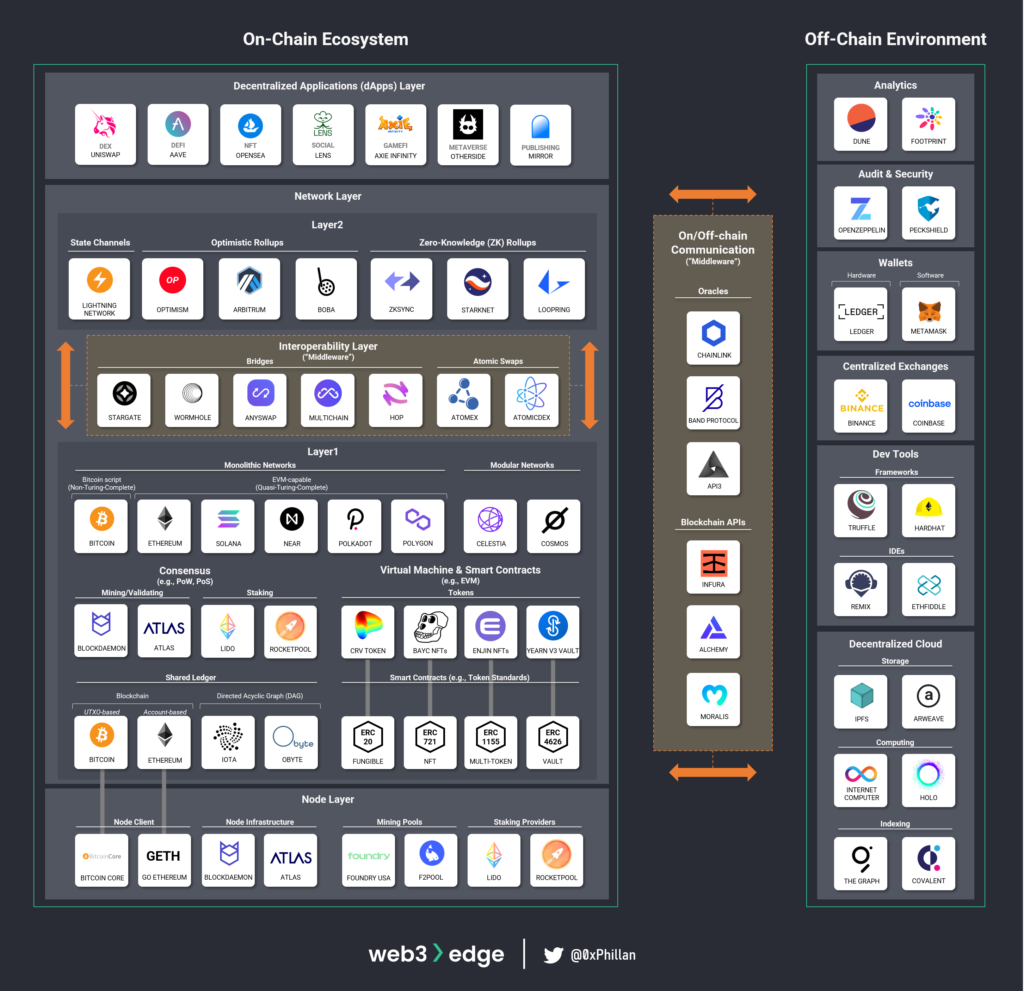

We categorize the Web3 infrastructure into multiple sections reflecting the on-chain ecosystems, the off-chain environment that supports the on-chain ecosystems and middleware that connects decentralized networks with each other and allows these to connect with the off-chain environment.

- On-chain ecosystem

- Node layer: Mining/validating nodes, node client software, mining/staking pools

- Network layer:

- Layer 1 networks: monolithic networks, modular networks, consensus (PoW, PoS), shared ledger technology, Virtual Machines & EVM-compatibility, smart contracts and ERC token standards

- Layer 2 networks: lightning network, optimistic roll-ups, zero-knowledge rollups

- Decentralized applications (dApp) layer

- Off-chain Environment: Analytics, audit & security, wallets, centralized exchanges (CEXs), developer tools (frameworks, IDEs), decentralized cloud (storage, computing, indexing)

- Interoperability layer (“middleware”):

- Network interoperability: bridges, atomic swaps

- On/Off-chain Communication Tools: Blockchain APIs, Oracles

On-chain ecosystem

The on-chain ecosystem is split into three primary layers:

- the decentralized applications (dApp) layer

- the network layer

- the node layer

In combination, these three layers enable the smart-contract-driven ecosystems and applications that Web3 is best known for. We look at the on-chain ecosystem from the node layer and work ourselves up to the dApp layer.

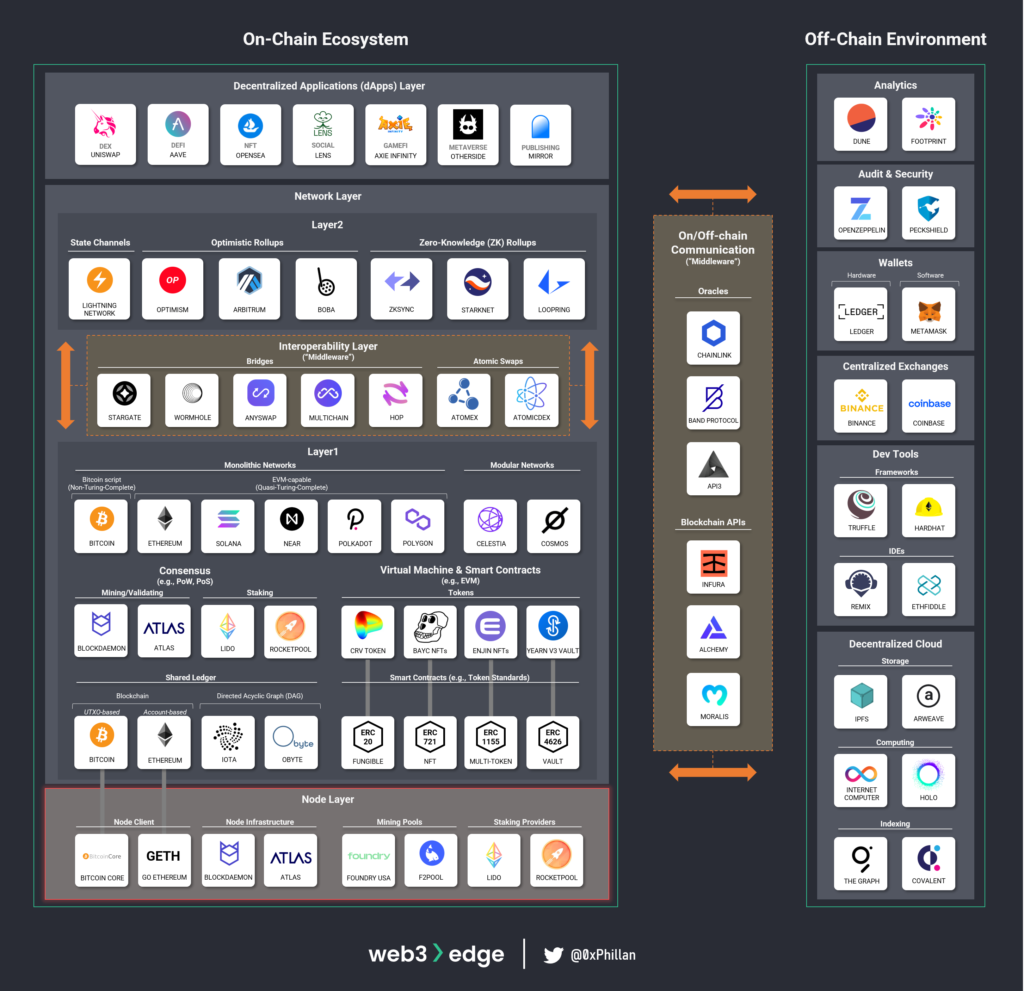

The Node Layer

This layer is also often referred to as the hardware layer, because at this level the hardware and everything related to operating the hardware to participate in a specific blockchain network is set up.

Node Client

A node is a server that runs a network-specific software called a client, which allows the node to participate in the network’s block-creation process, gives access to the entire blockchain’s historical data and allows for RPC commands to be executed (more on that in the Layer1 section). RPC stands for remote procedure call, which allows certain commands to be called and executed by the node.
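To make the idea of an RPC command more concrete, here is a minimal sketch of how a request to an Ethereum node could be put together. Ethereum clients expose a JSON-RPC interface, and `eth_blockNumber` is one of its standard methods; the helper names `build_rpc_request` and `parse_hex_quantity` are made up for this illustration, and no request is actually sent.

```python
import json


def build_rpc_request(method, params=None, request_id=1):
    """Build a JSON-RPC 2.0 request body as a JSON string (hypothetical helper)."""
    return json.dumps({
        "jsonrpc": "2.0",
        "method": method,
        "params": params or [],
        "id": request_id,
    })


def parse_hex_quantity(hex_string):
    """Convert a hex-encoded quantity such as "0x10" into an integer."""
    return int(hex_string, 16)


# eth_blockNumber asks the node for the number of the most recent block.
request_body = build_rpc_request("eth_blockNumber")
print(request_body)
```

In practice this body would be sent via HTTP POST to the node's RPC endpoint, and the node would answer with a hex-encoded block number that `parse_hex_quantity` can decode.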

At the time of writing this piece, the two largest blockchain networks by market cap are Bitcoin and Ethereum. While there are different requirements to participate in each network, they both require a server (any computer) that meets the client’s hardware specifications, an internet connection, and the client software. For Bitcoin, the most popular client software is Bitcoin Core, while for Ethereum the most popular client is Geth (Go Ethereum).

The client also codifies the blockchain’s rules and ensures that any new block that is validated also abides by the same rules. This is important, because if a node validates a block that other nodes do not accept, the network gets forked: one set of nodes follows one set of rules, while the remaining nodes follow another set of rules. While they may share the same history, at the moment that different validation rules are introduced a new chain is created and accepted only by the nodes that accept the new rules.

While the above are the most popular clients, they are not the only clients that can be used to participate in blockchain networks. As long as other clients use the same validation rules, they can validate blocks and contribute to the blockchain.

To read more about how blockchains work, skip to the Layer1 Networks section.

Node Infrastructure Providers

The average user is often encouraged to run their own nodes to support decentralization of a public network. When more users run their own nodes, there is a lesser chance that a single actor can accumulate a majority of running nodes and attack the network. Users are encouraged to run their own nodes through block rewards and transaction fees, which networks distribute to node operators.

Despite these incentives, there are many reasons why a user would not want to set up a node on their own: a complex technical setup, limited up-front capital to purchase the necessary hardware, or needing the node only temporarily. This is where node infrastructure providers come in. These providers take care of node setup and operation, providing an end-to-end service for clients. Some larger providers that specialize in node infrastructure include Blockdaemon and Atlas.

An often-overlooked purpose of these node infrastructure providers is to set up nodes for new blockchain projects that have yet to build a strong and decentralized node network. These newer networks can utilize node infrastructure providers to bootstrap a globally distributed network without the hassle of setting up their own infrastructure in each country.

Mining Pools and Staking Providers

While node infrastructure providers set up nodes for clients, mining pools and staking providers operate their own nodes but allow users to pool their resources under their nodes. This increases the likelihood of the nodes receiving block rewards and transaction fee revenue from the network. For users that want to put their idle hardware to use, this means they can join a pool without any complex technical set up and start earning revenue with their existing resources.

There are some nuances to node operations across networks with different consensus mechanisms. Essentially, networks that use Proof-of-Work pool computational resources, while Proof-of-Stake networks pool network tokens. For Proof-of-Work networks, mining pools heavily reduce the technical barriers to entry, while for Proof-of-Stake networks, staking providers heavily reduce the financial barrier to entry (minimum stake required). More details are covered in the consensus section.
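The pooling idea can be sketched as a proportional split of a reward among pool members. This is a simplified assumption for illustration: real pools charge fees and use more elaborate payout schemes, and the member names, contribution figures and reward amount below are invented.

```python
def split_reward(block_reward, contributions):
    """Split a reward in proportion to each member's contribution.

    `contributions` maps a member to the resources they pooled: hash power
    in a mining pool, or staked tokens with a staking provider.
    """
    total = sum(contributions.values())
    if total <= 0:
        raise ValueError("the pool has no contributions")
    return {
        member: block_reward * share / total
        for member, share in contributions.items()
    }


# Hypothetical pool: three members contribute 50%, 30% and 20% of the resources.
pooled = {"alice": 50, "bob": 30, "carol": 20}
payouts = split_reward(6.25, pooled)
print(payouts)
```

A member who could never win a block alone still receives a steady, small share of every block the pool wins.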

Some of the biggest mining pools include Foundry USA and F2POOL, while some of the biggest staking providers include Lido and Rocketpool.

Summary – The Node Layer

The node layer of Web3 consists of thousands of globally distributed nodes, where each node that is part of a specific network runs the client software required for that network. As long as the validation rules of the client software are the same as the rest of the nodes on the network, the node can operate normally without causing a fork in the blockchain.

While anybody can run their own node on a public decentralized blockchain network, node infrastructure providers specialize in setting up and operating the hardware required to run a node and bootstrap a network.

Finally, mining pools and staking providers reduce the barriers to entry for mining and staking operations. This allows users to participate in mining and staking activities and earn network incentives without having to meet the full network requirements.

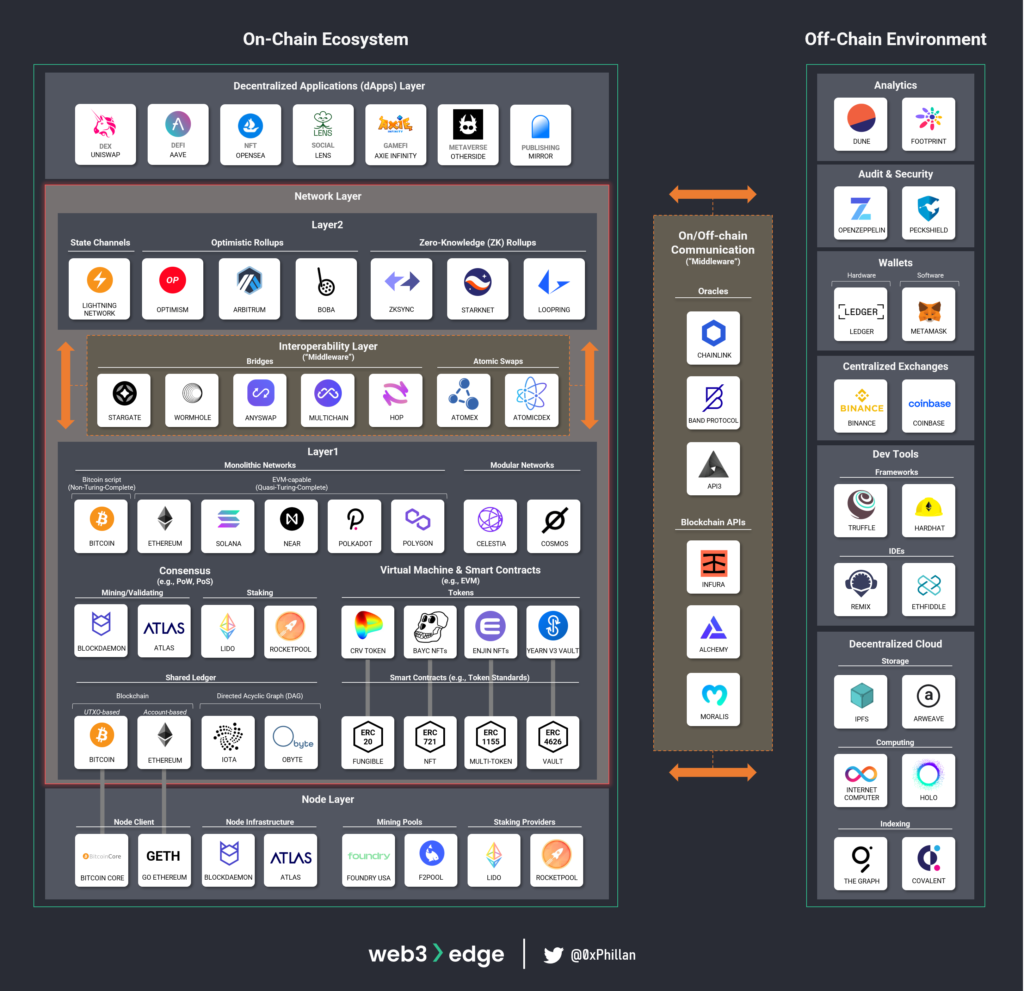

The Network Layer

Blockchain networks live on the node infrastructure mentioned above. The network layer consists of various parts and comprises a wide range of technologies with the essential layers being Layer1 networks, Layer2 networks and an Interoperability Layer to communicate between these networks.

Layer1 Networks

Bitcoin, Ethereum and Solana are probably the best-known Layer1 networks at the time of writing. A Layer1 network refers to the main network of a Web3 ecosystem that settles transactions. Layer2 networks are an additional layer built on top of Layer1 networks, to which transactions can be off-loaded (more on that in my next piece). While architecturally quite different, Layer1 networks all rely on a similar set of architectural primitives:

- they each have a shared ledger which tracks transactions on the network

- they each employ mechanisms to achieve consensus relating to which transactions and blocks are to be considered valid

- they each have a way to compute commands sent to the network (virtual machines for Ethereum, other EVM-compatible chains and Solana, and Bitcoin Script for Bitcoin)

In the following sections we will look at each of these three elements and dissect how we get from transactions to blockchains.

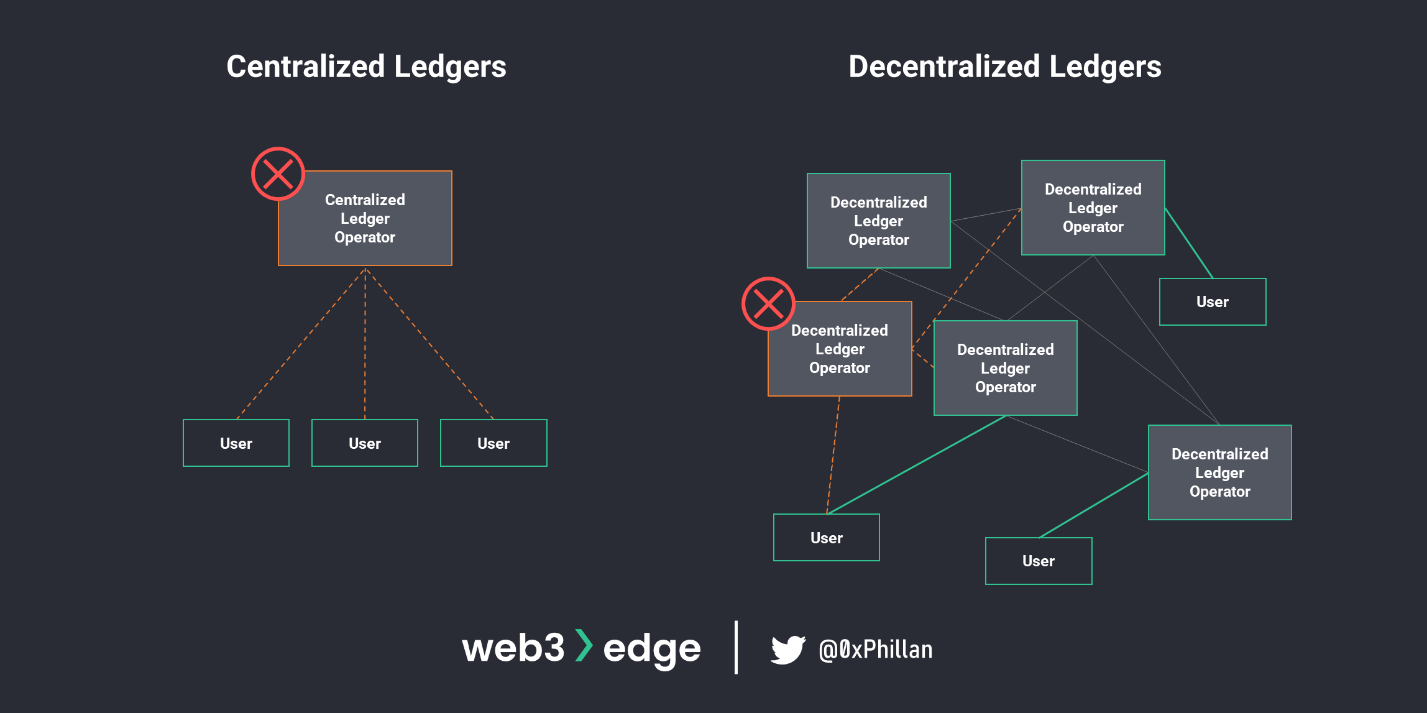

Shared Ledger

All decentralized blockchain networks have a shared ledger. In fact, the blockchain is the shared ledger. Let’s take a step back: A ledger is a record of a business’ economic activities, used to track the transfer of money or the transfer of asset ownership. The term shared ledger means that the ledger is not held and managed by one single entity, but that it is held and managed by many entities.

In decentralized blockchain networks, the blockchain (ledger of all activities on the network) is saved on all nodes on the network. If the ledger of activities were managed and stored by only one centralized authority, we would run into the below challenges:

- Censorship and exclusion (see users deplatformed by PayPal)

- Malfeasance by record-keepers (see Luckin Coffee misstating its financials)

- Loss of records (see the destruction of the library of Alexandria)

If the ledger is stored across many hundreds if not thousands of nodes globally, we get a system that is very hard to tamper with or damage, both intentionally and unintentionally. If one node falls away, there are many other nodes that a user can connect with to continue interacting with the ledger.

However, this system does introduce other challenges: how do the nodes on the network agree on what is a correct or valid ledger entry? This is where consensus algorithms come in.

Consensus

In blockchain networks, the term consensus refers to a general agreement between nodes on the network about which ledger entries (transactions and blocks) are valid and to be accepted by the nodes.

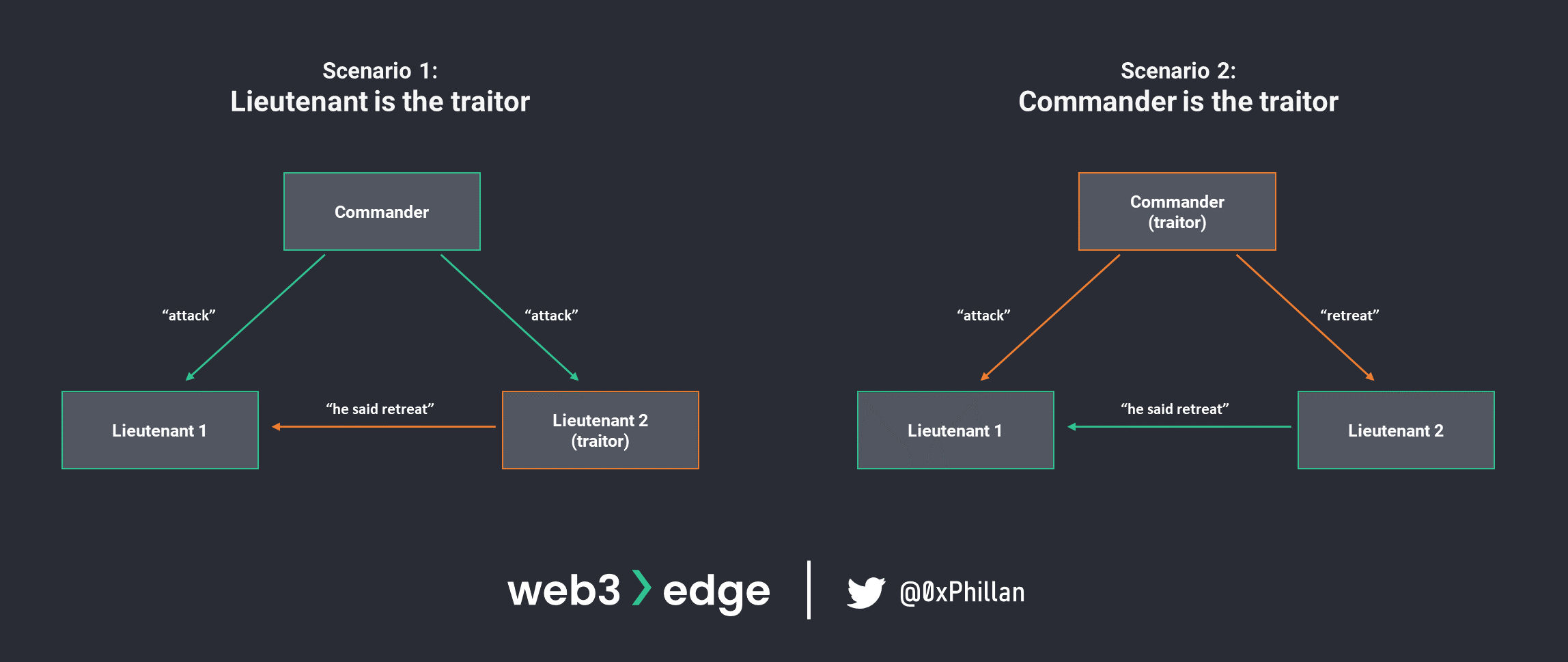

In academia, this problem is known as the Byzantine Generals’ Problem. This problem describes a situation in which a system’s actors must agree on a strategy to avoid catastrophic failure, but some of the actors in the system are unreliable.

In this imaginary scenario, there are three actors, and they must coordinate their next step in the Byzantine war to avoid being overrun by the enemy. One of the three actors is malicious and relays inconsistent messages to the remaining parties. How can the honest (non-malicious) actors in the system know who to trust? Or phrased differently: how can all actors in the system reach consensus about which message to accept?

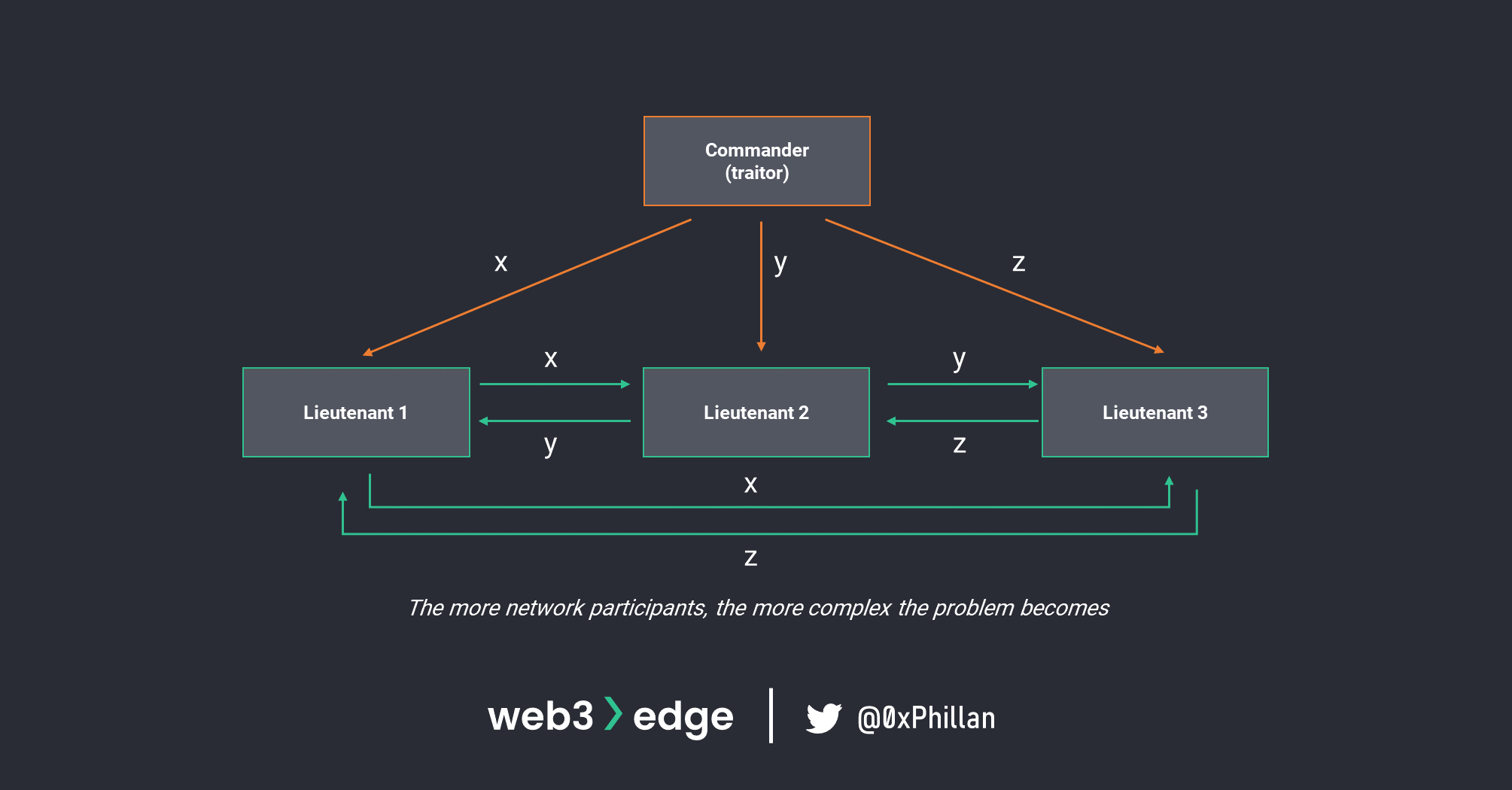

This question is of significant importance, because as more actors enter the system, the complexity of (mis)communication grows exponentially.

The first system to successfully address this challenge on a global scale was Bitcoin with its Proof-of-Work algorithm.

Proof-of-Work (PoW)

Bitcoin’s Proof-of-Work algorithm (also referred to as PoW) solves the Byzantine Generals’ Problem by requiring any message to have undergone a sort of validation for it to be accepted by nodes. Any message that has not undergone that validation is not accepted as valid and is declined by nodes.

The validation process also requires computational resources, which makes faking a validation prohibitively difficult. This is also where the term “Proof-of-Work” comes from: “prove to me that you have done the necessary work for me to accept your message”.

Let’s move from theory to practice and dig a little deeper into transactions, blocks and the mechanics of the PoW process. Don’t worry – we’ll keep it simple!

The Block Structure

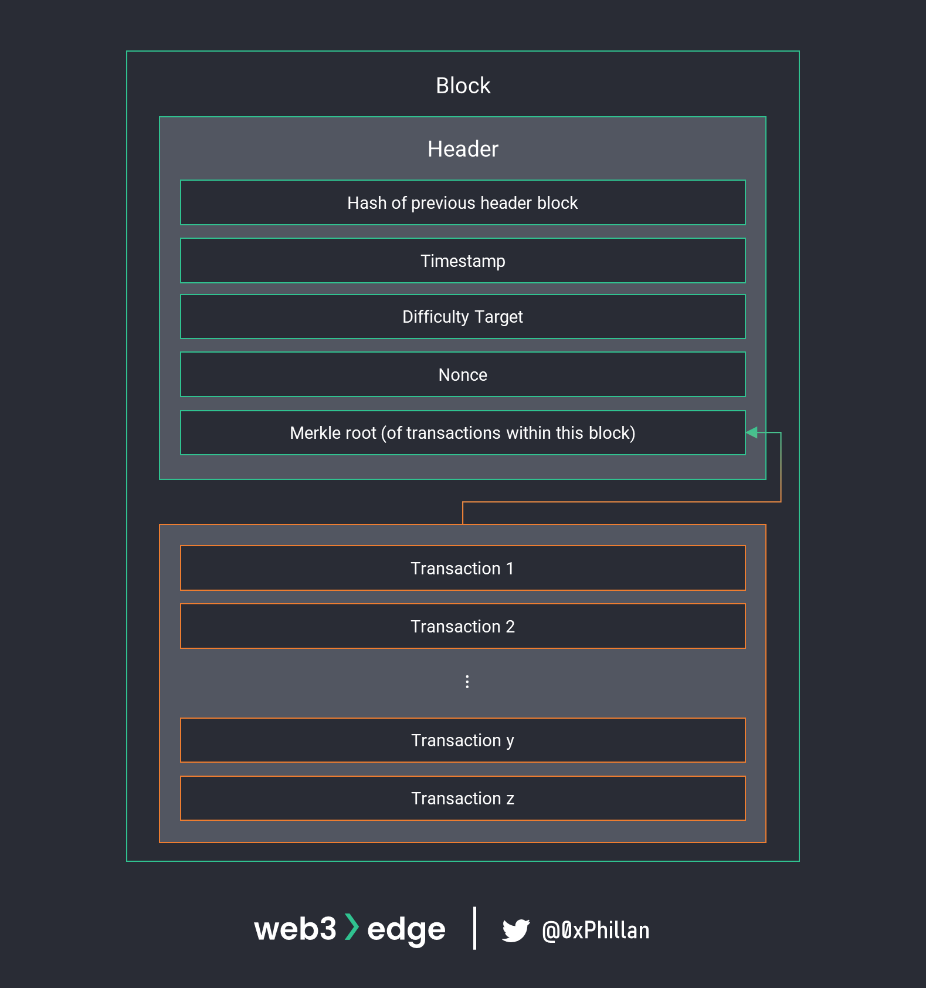

A Bitcoin block is where transactions are stored. Blocks are carefully controlled units of information that, once the cryptographic puzzle has been solved, are broadcast across the network.

A block in Bitcoin consists of two main parts:

- Block header

- List of transactions

The list of transactions is just what it sounds like: it’s a list of transactions that the node has received and includes in a block. In Bitcoin, a transaction is the transfer of bitcoin on the Bitcoin network (note: bitcoin with a lowercase b refers to the bitcoin asset, while Bitcoin with a capital B refers to the Bitcoin network). The Bitcoin network is a shared public ledger that tracks movements of bitcoin assets; hence a transaction on Bitcoin is the transfer of bitcoins between addresses.

Bitcoin uses unspent transaction outputs, also known as UTXOs, for transactions. Transactions and UTXOs are covered further in the UTXO Model vs Account Model section.

The block header is where things get interesting. While the number of transactions and the amount transferred in each transaction vary by block, the elements of the block header are the same for every block.

While the block header includes many elements, each of vital importance to the system, for introductory purposes we will cover the below in further detail:

- Hash of previous block’s header: the header of the previous block is hashed and stored here

- Difficulty target: determines the number of ‘leading 0s’ required and thus the mining difficulty

- Nonce: an arbitrary number (nonce is short for “number used once”)

- Merkle root: hashed output of all transactions within this block
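As a rough sketch, the two parts of a block and the four header elements listed above could be modelled as follows. The class and field names are illustrative assumptions rather than Bitcoin Core’s actual data structures, and a real Bitcoin header carries further fields (such as a version and a timestamp) that are left out here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BlockHeader:
    previous_block_hash: str  # hash of the previous block's header
    merkle_root: str          # single hash summarizing all transactions
    difficulty_target: int    # how hard the mining puzzle is
    nonce: int                # number miners vary to change the header hash


@dataclass(frozen=True)
class Block:
    header: BlockHeader
    transactions: tuple       # the list of transactions included in the block


# Placeholder values only; none of these are real hashes.
example_header = BlockHeader(
    previous_block_hash="00" * 32,
    merkle_root="ab" * 32,
    difficulty_target=2,
    nonce=0,
)
example_block = Block(header=example_header, transactions=("tx-1", "tx-2"))
print(example_block)
```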

From Block to Blockchain

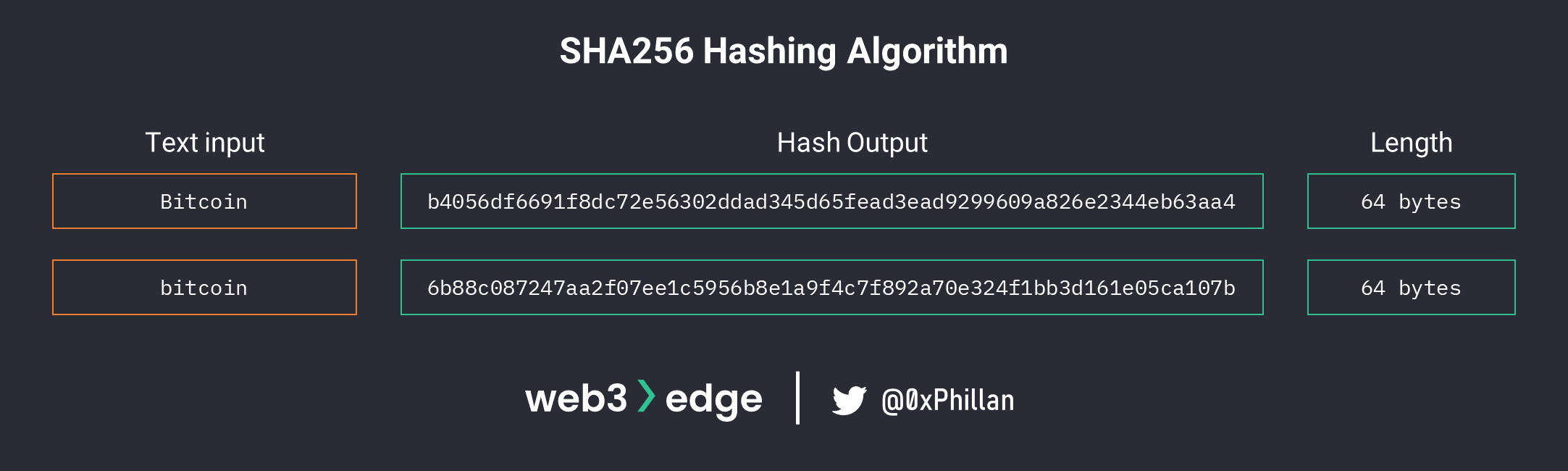

Before we proceed, we need to briefly cover hashing. Hashing is the process of transforming a string of characters into another, usually fixed-length, value. When a hashing algorithm is deterministic, it means that with the same inputs, the outputs will be the same every time. However, if one character of the original string changes, the hash output changes entirely, so much so that no relationship to the original string can be inferred. See the comparison below of the SHA-256 hash outputs for “Bitcoin” and “bitcoin”.

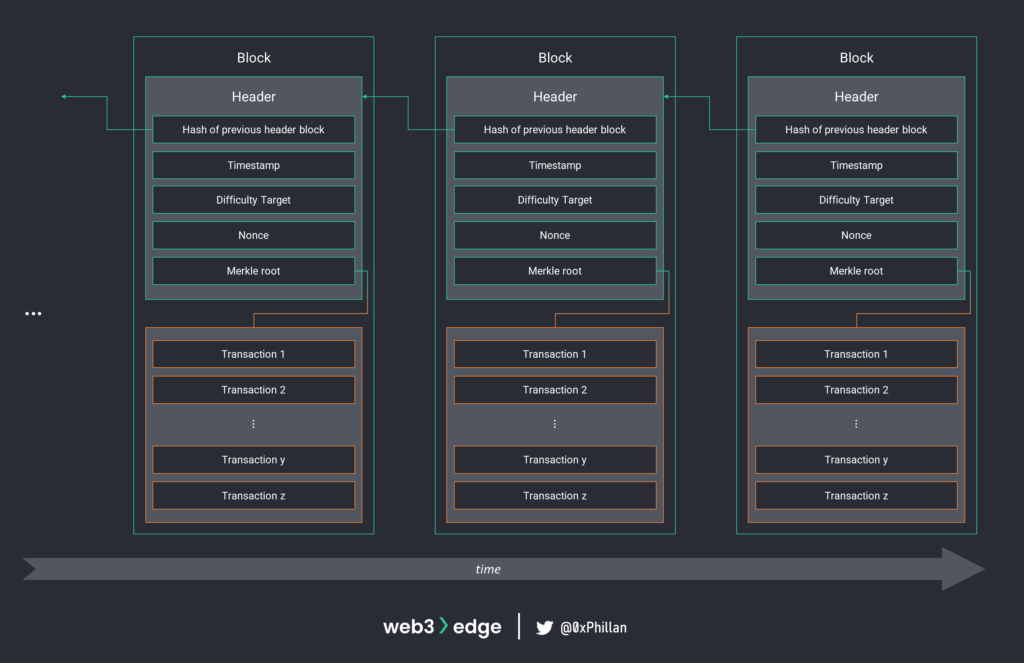

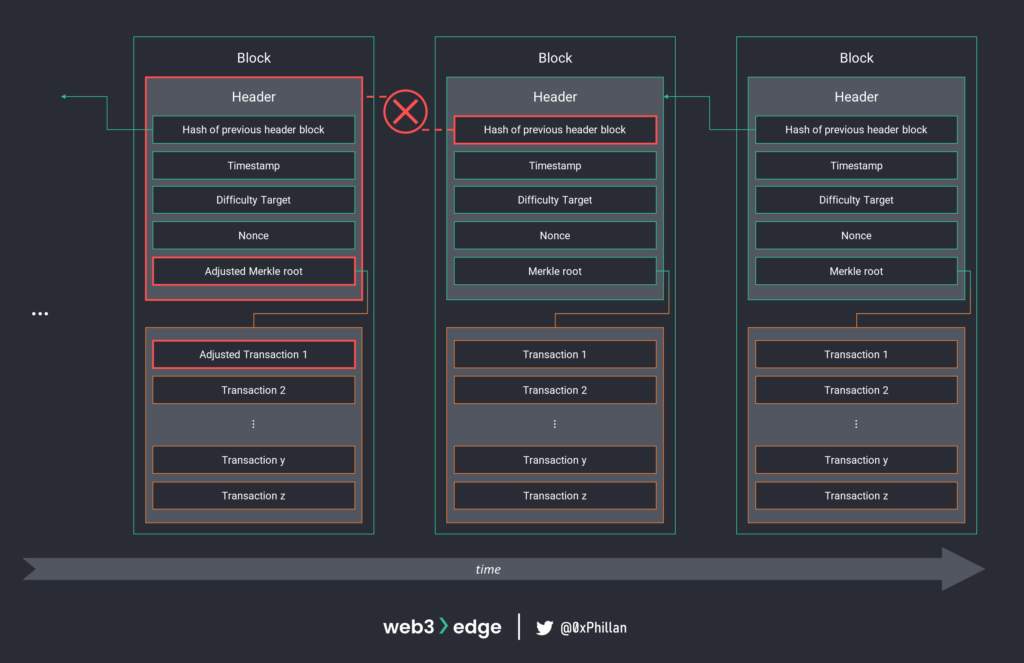
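The same comparison can be reproduced locally with Python’s standard `hashlib` module. This is only a small sketch; `sha256_hex` is a helper name chosen for this example.

```python
import hashlib


def sha256_hex(text):
    """Return the SHA-256 digest of a string as 64 hexadecimal characters."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


digest_upper = sha256_hex("Bitcoin")
digest_lower = sha256_hex("bitcoin")

# Only the first letter differs, yet the two digests look unrelated.
print(digest_upper)
print(digest_lower)
```

Running it twice prints the same two digests each time, which is the deterministic property described above.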

In Bitcoin, once a block is mined the header of that block is hashed and included as an input in the next block. Because each block includes the hash of the previous block’s header, a chain of blocks is created: this is the blockchain.

Any change in any block would break the chain because the hashed output that was already included in the next block would be different to the new hashed output. Such a change is thus rejected by nodes on the network.
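The chaining and the tamper check can be sketched with a toy chain. The header layout here (a dictionary serialized with JSON and hashed once with SHA-256) is an assumption made for readability and is not Bitcoin’s real serialization.

```python
import hashlib
import json


def hash_header(header):
    """Hash a toy header by serializing it to JSON with sorted keys."""
    encoded = json.dumps(header, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def build_chain(payloads):
    """Create toy headers that each store the hash of the previous header."""
    chain = []
    previous_hash = "0" * 64  # placeholder for the first block
    for payload in payloads:
        header = {"previous_hash": previous_hash, "payload": payload}
        chain.append(header)
        previous_hash = hash_header(header)
    return chain


def is_chain_valid(chain):
    """Check that every header still points at the hash of its predecessor."""
    for previous, current in zip(chain, chain[1:]):
        if current["previous_hash"] != hash_header(previous):
            return False
    return True


chain = build_chain(["block 0", "block 1", "block 2"])
print(is_chain_valid(chain))  # True

chain[0]["payload"] = "tampered"
print(is_chain_valid(chain))  # False
```

Changing the first block alters its hash, so the second block’s stored `previous_hash` no longer matches and the check fails.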

Merkle Root

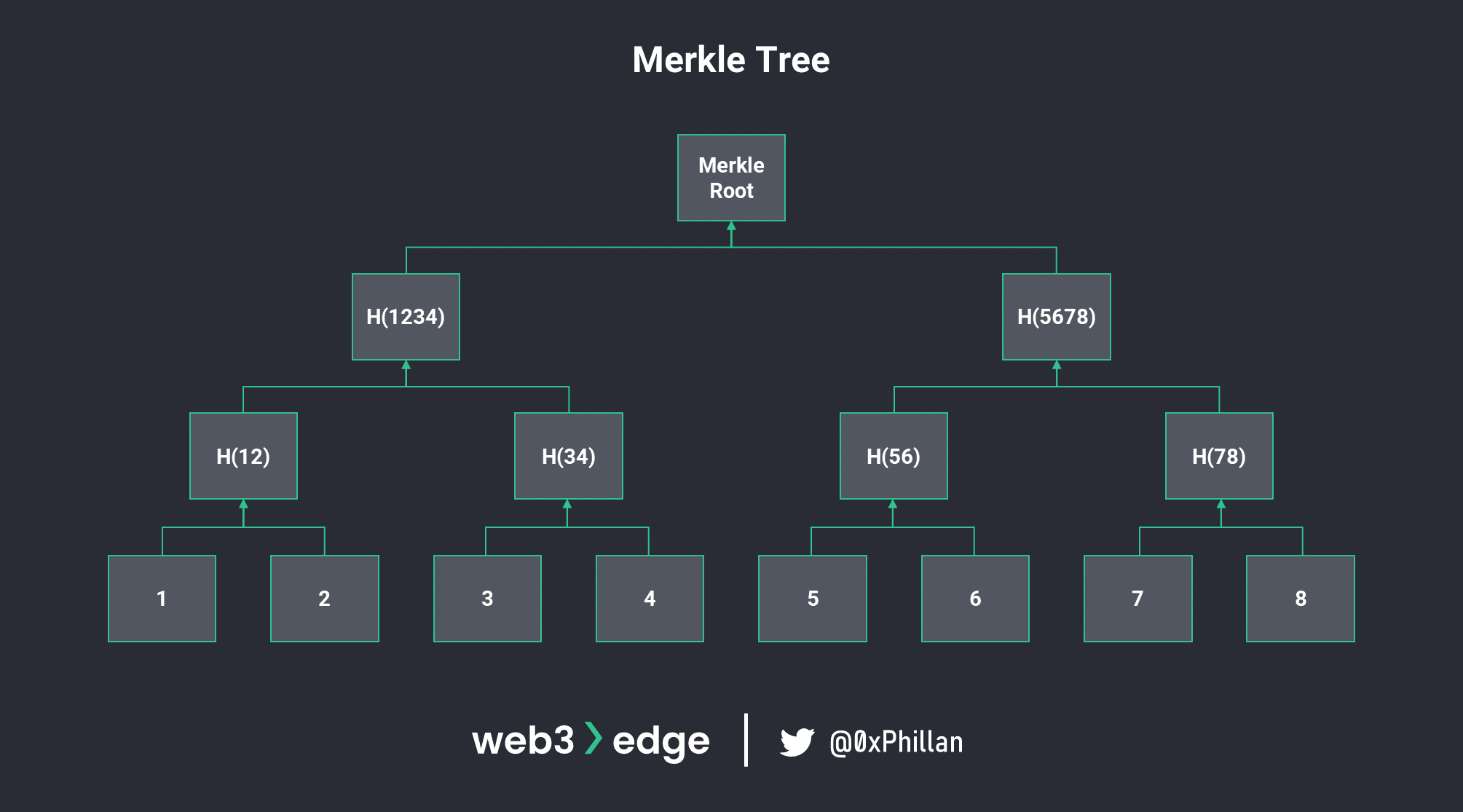

A Merkle tree is a data structure in which the elements of a data set are hashed and then re-hashed in pairs, recursively, until only one element remains. That remaining element is the Merkle root.

Merkle trees have an interesting mathematical characteristic: it is possible to prove that an element is part of a Merkle tree with only the Merkle root, the element and a short list of sibling hashes along the element’s path to the root (a Merkle proof), without needing every other element in the tree.

In Bitcoin, the Merkle root that is stored in a block header is a recursive hash output of all transactions included in that block. This means that if any transaction were to be adjusted, the Merkle root would also change, which would also change the hash output of the entire header. This would, again, lead to an invalidation of the block.
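A simplified sketch of a Merkle root and a membership proof is shown below. It hashes each level once with SHA-256 and duplicates the last hash when a level has an odd number of entries; Bitcoin itself applies SHA-256 twice and works on transaction IDs, so treat the function names and byte strings here as illustrative assumptions. The sketch expects at least one leaf.

```python
import hashlib


def sha256(data):
    return hashlib.sha256(data).digest()


def merkle_levels(leaves):
    """Return all tree levels, from the hashed leaves up to the root."""
    level = [sha256(leaf) for leaf in leaves]
    levels = [level]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level = level + [level[-1]]  # pair the last hash with itself
        level = [sha256(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def merkle_root(leaves):
    return merkle_levels(leaves)[-1][0]


def merkle_proof(leaves, index):
    """Collect the sibling hashes needed to rebuild the root from one leaf."""
    proof = []
    for level in merkle_levels(leaves)[:-1]:
        sibling_index = index ^ 1
        if sibling_index >= len(level):
            sibling_index = index  # odd level: the node is its own sibling
        proof.append((level[sibling_index], index % 2 == 0))
        index //= 2
    return proof


def verify_proof(leaf, proof, root):
    """Recompute the path to the root using only the leaf and its proof."""
    current = sha256(leaf)
    for sibling, current_is_left in proof:
        pair = current + sibling if current_is_left else sibling + current
        current = sha256(pair)
    return current == root


transactions = [b"tx-a", b"tx-b", b"tx-c"]
root = merkle_root(transactions)
print(verify_proof(b"tx-c", merkle_proof(transactions, 2), root))  # True
```

Changing any transaction changes the root, and a proof built for one transaction does not verify for a different one.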

The “Work” in Proof-of-Work

Now that we know what hashing is, how blocks are structured and how blocks are chained to form a blockchain, we can finally dig deeper into how Proof-of-Work actually works. Going back to the Byzantine Generals’ Problem: the validated message mentioned above is, in fact, a block in the blockchain.

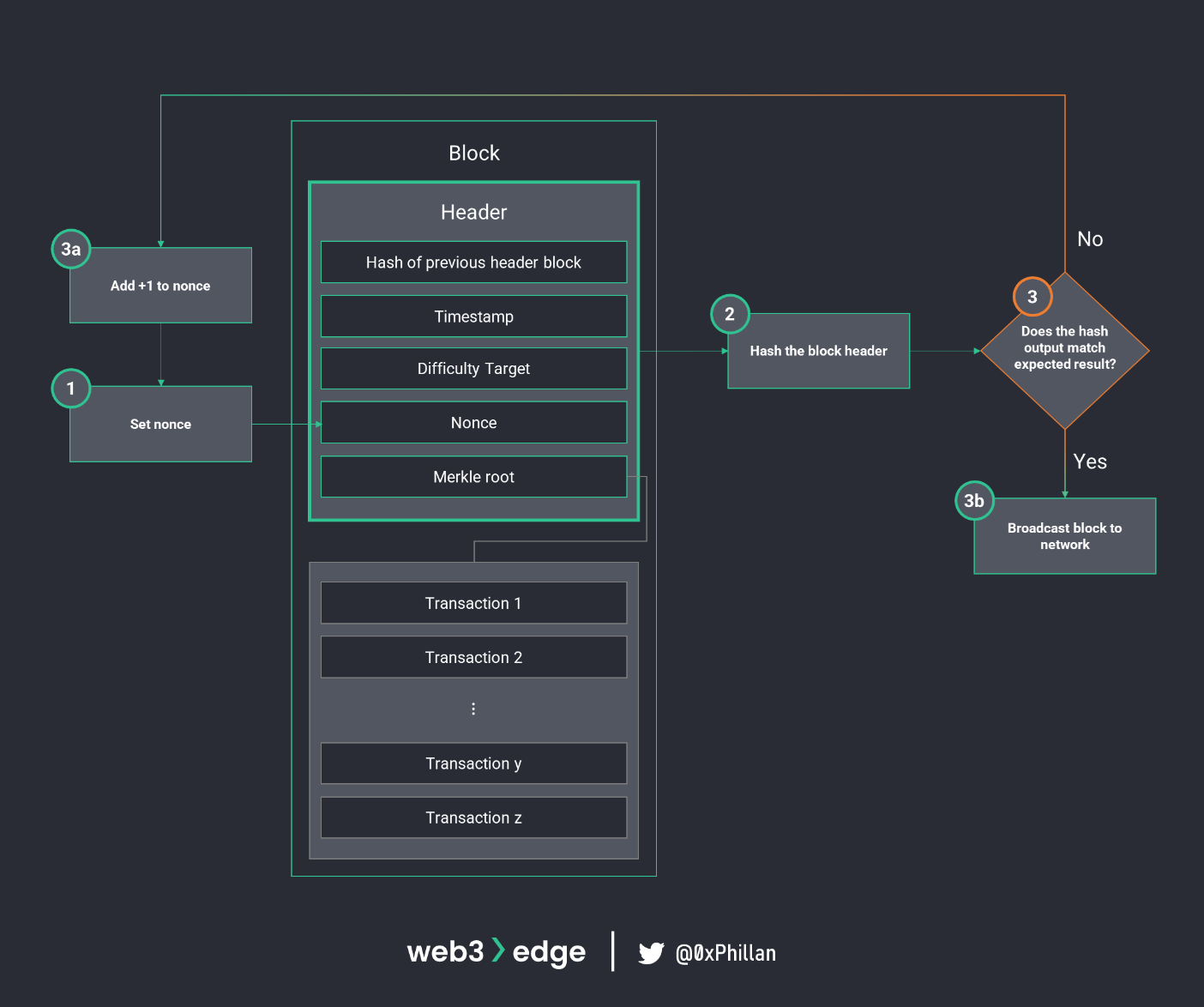

For a block to be validated, a hash needs to be found that meets specific criteria. Remember how one change in just one single bit will drastically change the hash output? This is exactly how Bitcoin’s PoW algorithm searches for the target hash: the nonce, which is an arbitrary number, is adjusted to change the block header’s hash output. If the hash output doesn’t meet the target hash, the nonce is adjusted again. This process is repeated until the block header hash meets the target condition. Once the target condition is fulfilled, the block header counts as validated and the block is broadcast to other nodes on the network to append the new block to their copies of the blockchain.

The target condition, or the expected hash, is described here by how many leading zeros it has (more precisely, the hash, read as a number, must not exceed a target value, which in practice means that it starts with a certain number of zeros). If a hash is generated with sufficient leading zeros, i.e., the work has been done to find a hash that meets the target conditions, then the nodes on the network accept that block as valid: the block is considered “mined”.

To better understand this process, navigate to this hashing algorithm simulator on GitHub. Input the characters “bitcoin” and append numbers at the end, starting from 0 and increasing the number by 1 each time until you reach one leading zero (e.g., bitcoin0, bitcoin1, etc.). You will notice that, in order to find one leading zero, i.e., the first character in the hash is a zero, you only need to increment the number to 3 (“bitcoin3”). Now try to find two leading zeros. Spoiler: the first hash with two leading zeros results from “bitcoin230”.
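The same experiment can be automated. The sketch below appends an increasing number to a string and stops at the first SHA-256 hex digest with the requested number of leading zeros. It mirrors the simulator exercise, not real Bitcoin mining, which hashes a binary block header twice and compares the result against a numeric target; the function name `mine` is chosen for this example.

```python
import hashlib


def mine(data, leading_zeros):
    """Try nonces 0, 1, 2, ... until the hex digest starts with enough zeros."""
    prefix = "0" * leading_zeros
    nonce = 0
    while True:
        digest = hashlib.sha256(f"{data}{nonce}".encode("utf-8")).hexdigest()
        if digest.startswith(prefix):
            return nonce, digest
        nonce += 1


nonce, digest = mine("bitcoin", 2)
print(nonce, digest)
```

Each additional leading zero in the hex digest makes the search roughly 16 times longer on average, which is why raising the difficulty raises the amount of work.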

There are further rules that nodes abide by: the longest chain is always the valid chain (preventing the entire blockchain from being overwritten), blocks that have been mined must have a timestamp within a certain threshold of network time (so that the most recent blocks cannot be overwritten), and there are complex mechanics around how the network difficulty (the number of leading zeros for the target hash) is determined. Interested readers can explore Bitcoin.org or the Bitcoin Wiki for more details.

The Paradigm Shift

The above mechanism, for the first time in history, allowed for transactions to be independently confirmed and validated without having a third-party witness and approve the transaction. Instead of submitting a transaction to a bank, which suffers from centralization challenges, it is sent to a network of independent nodes that can autonomously process the transaction without intervention. This technological paradigm shift and reimagining of ledgers are the fundamental primitives on which today’s Web3 ecosystems are built.

Furthermore, because the only requirements to join these networks are a computing device that can run the node software and an internet connection, anybody can join the network as an independent node, enhancing the decentralization of the network.

Criticisms

While PoW networks such as Bitcoin have many nodes (nearly 15,000 as of September 15th 2022 according to bitnodes.io), there are criticisms that the barrier to entry for individual nodes is too high as a result of the high competition on the network. The more hash power (i.e., computational resources) a node has, the greater the likelihood that the node will be able to solve the hash puzzle first, because it can do more calculations at a faster pace than other nodes on the network. Entering the Bitcoin network as a single node with low hash power would result in energy costs with a nearly non-existent chance to be the first to successfully mine a new block.
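A back-of-the-envelope sketch shows why a small miner rarely wins. It assumes that a miner’s chance of finding each block equals their share of the total hash power and that the network targets one block every 10 minutes, as Bitcoin does; the share used in the example is invented.

```python
def expected_minutes_per_block(hash_share, block_interval_minutes=10):
    """Average waiting time for a miner holding `hash_share` of total hash power."""
    if not 0 < hash_share <= 1:
        raise ValueError("hash_share must be in the range (0, 1]")
    return block_interval_minutes / hash_share


# Hypothetical solo miner with one millionth of the network's hash power.
minutes = expected_minutes_per_block(0.000001)
years = minutes / (60 * 24 * 365)
print(round(years, 1))
```

With that share the expected wait comes out at roughly 19 years per block, which is the gap that mining pools are meant to smooth out.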

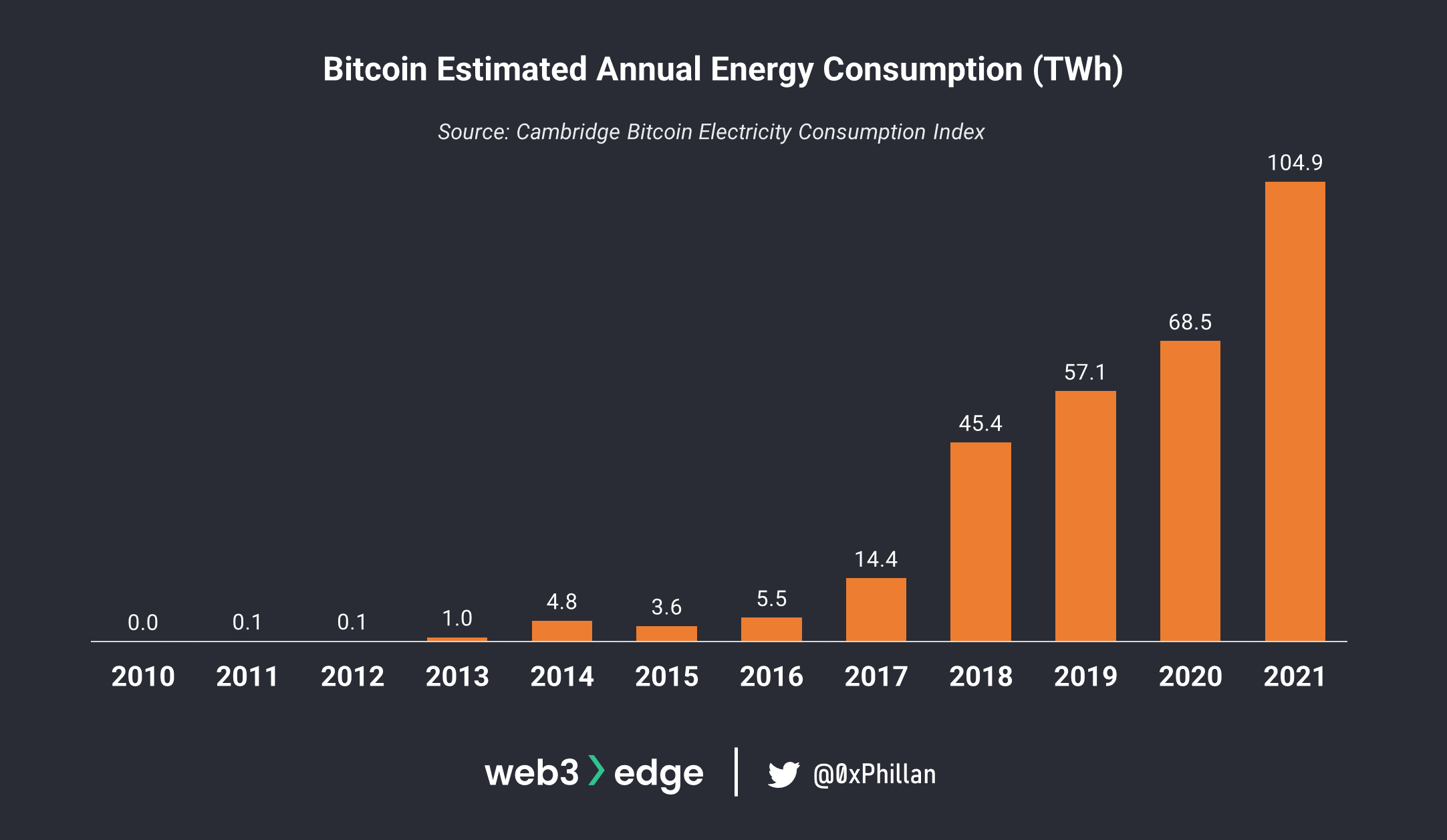

Energy consumption is also a highly-debated topic: the network requires huge amounts of energy, with some estimates stating Bitcoin’s annual energy consumption exceeds that of Norway.

This energy is spent on nodes doing millions of hashing calculations every second, looking for a hash. While this adds security to the Bitcoin network, it does raise the question of whether there are less wasteful approaches to validating blocks. This is where Proof-of-Stake comes in.

Proof-of-Stake (PoS)

In Proof-of-Stake, nodes are given the privilege to validate a block based on their stake in the network. This is a fundamentally different approach to PoW and heavily reduces the computational power required to validate. Instead of providing computing power, nodes put up their native network tokens as collateral in exchange for the chance to validate blocks. This essentially removes competition-based computation and increases the spread of nodes that are able to successfully validate blocks.

Post-merge Ethereum is a Proof-of-Stake network. It requires 32 ETH to be staked to become a validator, after which the node can participate in block validation, thus contributing to new blocks being added to the network. Staking refers to the locking up of tokens, and is the basis for PoS networks.

Apart from the high upfront cost of becoming a validator, PoS networks also employ other methods to prevent malicious actors from interfering with the network. Generally, PoS networks also require multiple nodes to validate the same block simultaneously, which reduces the likelihood of a node validating an incorrect or malicious block. Furthermore, if a node is found to behave maliciously, its stake can be slashed. This means that the amount of network tokens it has locked with the protocol is removed from the node and either moved to a temporary address or burnt. Burning of tokens refers to permanently removing the tokens from circulation by sending them to an address that nobody on the network has access to. On Ethereum, this is the null address.
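The two ideas above, stake-based selection and slashing, can be sketched with a generic stake-weighted lottery. This is not how Ethereum or any specific network selects validators; the validator names, stake sizes and the 50% slashing fraction are assumptions picked for the example.

```python
import random


def pick_validator(stakes, rng):
    """Pick one validator with a probability proportional to its stake."""
    validators = list(stakes)
    weights = [stakes[validator] for validator in validators]
    return rng.choices(validators, weights=weights, k=1)[0]


def slash(stakes, validator, fraction):
    """Remove a fraction of a misbehaving validator's stake and return it."""
    penalty = stakes[validator] * fraction
    stakes[validator] -= penalty
    return penalty


stakes = {"node-a": 32.0, "node-b": 64.0, "node-c": 32.0}
rng = random.Random(42)  # seeded so the sketch is repeatable

chosen = pick_validator(stakes, rng)
print(chosen)

# Suppose node-b is caught misbehaving: half of its stake is removed.
burned = slash(stakes, "node-b", 0.5)
print(burned, stakes)
```

A validator with twice the stake is twice as likely to be picked, and after slashing it both loses tokens and has a smaller chance of being selected again.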

Other Consensus Mechanisms

Apart from Proof-of-Work (PoW) and Proof-of-Stake (PoS), there are many more consensus mechanisms that are designed for specific networks with specific purposes. Below is a non-exhaustive list of popular consensus mechanisms:

- Delegated Proof-of-Stake (DPoS)

- Proof-of-Authority (PoA)

- Proof-of-Activity (PoA)

- Proof-of-Burn (PoB)

- Proof-of-Spacetime (PoSt)

- Proof-of-History (PoH)

- Practical Byzantine Fault Tolerance (pBFT) Consensus

Shared Ledgers – Accounting Systems (UTXO vs Account Model)

Earlier we mentioned that blockchains are blocks of data that are cryptographically chained to each other through hashing, which creates a ledger. This ledger is saved across thousands of nodes on the network, making the ledger ‘shared’ amongst these nodes. Any ledger, no matter if a shared blockchain ledger or a traditional accounting ledger, requires bookkeeping. Bookkeeping refers to how transactions are accepted and executed, and how the new balances are stored on the blockchain. In Web3, there are two primary bookkeeping models:

- The Unspent Transaction Output (UTXO) Model (e.g., Bitcoin)

- The Account Model (e.g., Ethereum)

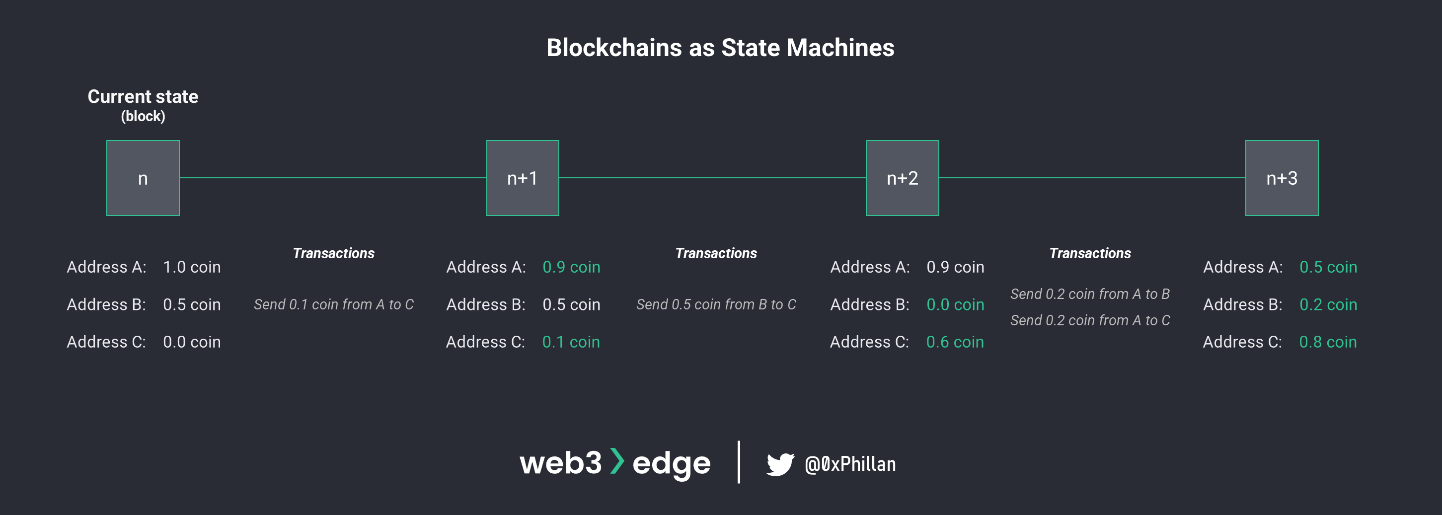

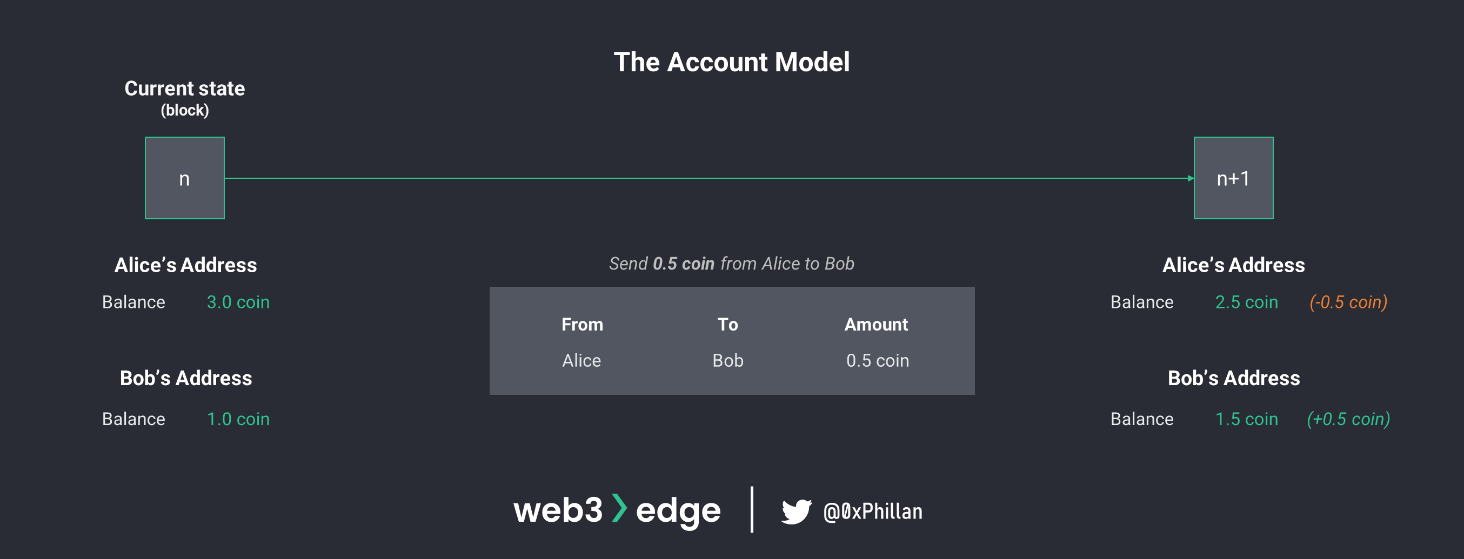

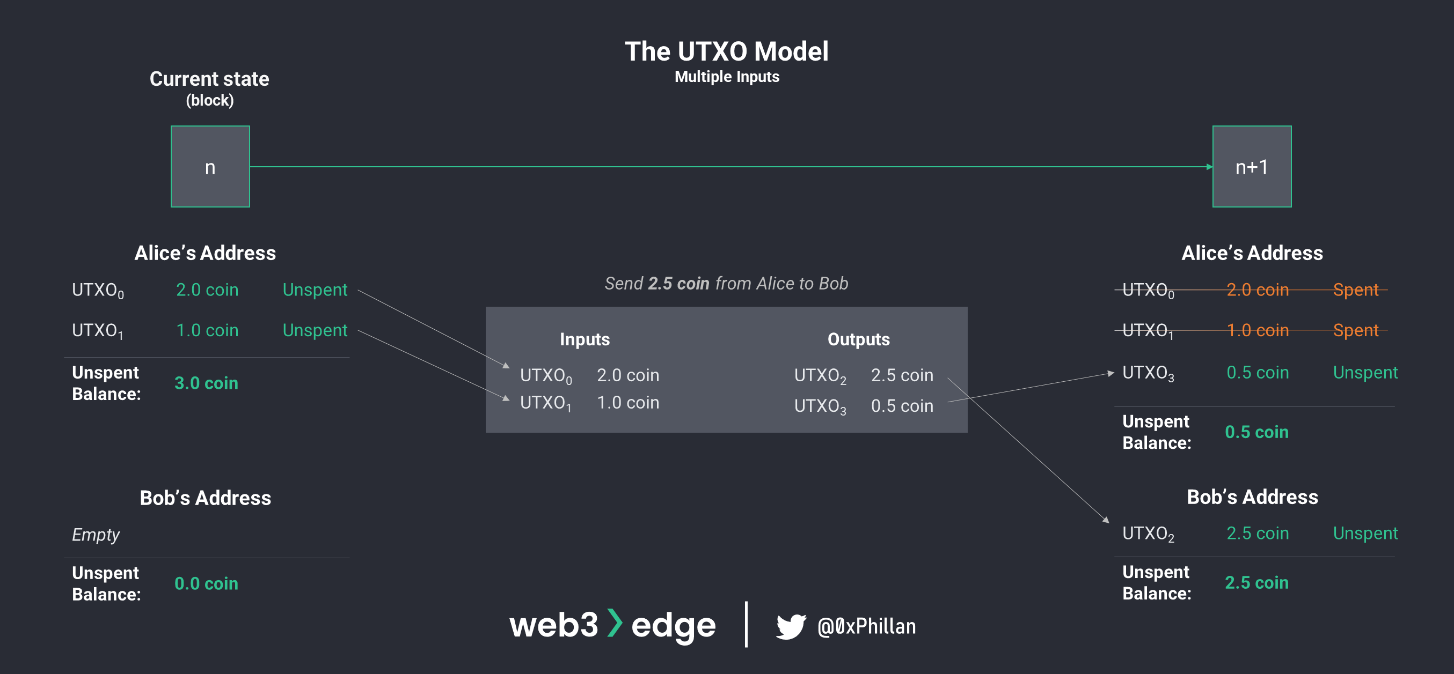

To aid in comprehension of these different bookkeeping models, it helps to think of blockchains as state machines. A state machine is a system that stores its state, and its state can change based on inputs to the system. This means that at any given point in time, the system is in a certain state, and with any inputs to the system, e.g., through transactions, the state of the system changes. When inputs are provided to the system and the state changes, the system undergoes a state transition.

If we look at blockchains through the lens of state machines, this means that at any given point in time the blockchain system is in state n and any block that is added to the blockchain leads to a state transition and a new state of n+1. This new state of n+1 takes into account all transactions that were included in the new block that was added, leading to a new system state.
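The state machine view can be sketched as a function that takes state n and a block and returns state n+1. The state layout used here (a block height plus a table of balances) and the transaction format are assumptions made for the example.

```python
from functools import reduce


def apply_transaction(balances, tx):
    """Return new balances after one (sender, recipient, amount) transfer."""
    sender, recipient, amount = tx
    if balances.get(sender, 0) < amount:
        raise ValueError(f"{sender} cannot cover {amount}")
    updated = dict(balances)
    updated[sender] = updated.get(sender, 0) - amount
    updated[recipient] = updated.get(recipient, 0) + amount
    return updated


def apply_block(state, block):
    """State transition: state n plus one block gives state n+1."""
    balances = reduce(apply_transaction, block, state["balances"])
    return {"height": state["height"] + 1, "balances": balances}


def replay(genesis, blocks):
    """Apply every block in order, starting from the genesis state."""
    return reduce(apply_block, blocks, genesis)


genesis = {"height": 0, "balances": {"alice": 10, "bob": 0}}
blocks = [
    [("alice", "bob", 4)],
    [("bob", "alice", 1), ("alice", "bob", 2)],
]
latest = replay(genesis, blocks)
print(latest)  # {'height': 2, 'balances': {'alice': 5, 'bob': 5}}
```

Because each transition is deterministic, any node that replays the same blocks from the same starting state arrives at the same final state.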

The Unspent Transaction Output (UTXO) Model

The difference between UTXO model and Account Model is in how bookkeeping – or the recording of transactions – is handled.

Without going into too much detail: in the UTXO model, there is no such thing as an account balance. Instead, every transaction is a receipt noting how much was sent from whom to whom. This is where the name Unspent Transaction Output comes from: the balance a user can transfer is the sum of the outputs of previous transactions that they have received and not yet spent.

When a user wants to send bitcoins, all bitcoins within the selected UTXO become transaction inputs (see UTXO0 above). A new UTXO is created with the amount to be sent (see UTXO2 above). If the UTXO holds more bitcoins than are to be sent, the remaining bitcoins are sent back to the user as a new UTXO (in the example above, 0.5 are to be sent but 2.0 are held in UTXO0, so UTXO2 contains the 0.5 to be sent and UTXO3 contains the 1.5 that are returned to the sender).

This also enables an interesting characteristic: as a result of the UTXO model, the origin of every single native token can be traced back to its creation, because every transaction output must have a corresponding input. For Bitcoin, which uses the UTXO model, that means every bitcoin can be traced back to the block in which it was mined. As a result, the concept of balances does not exist in the UTXO model. Instead, balances are an aggregation of all transaction receipts in the network.

Every transaction on the network precisely defines who gets how much bitcoin from which transaction input. The system then verifies that the transaction inputs are unspent, that the sender has the authority to send the bitcoins and that the recipient meets the right parameters to receive them. Thus, the UTXO model can be thought of as a verification system.

While not included in the previous example, transaction fees which go to miners are also deducted as part of the transaction. Instead of 1.5 coin, UTXO3 is likely to be 1.499 coin with the difference being the transaction fee.
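The example above, including the fee, can be sketched as follows. Amounts are kept in satoshi (1 bitcoin = 100,000,000 satoshi) to avoid rounding issues. The `UTXO` class, the `spend` function and the reference strings are illustrative assumptions, and the ownership check by name stands in for the signature checks a real node performs.

```python
from dataclasses import dataclass

SATOSHI_PER_BTC = 100_000_000


@dataclass(frozen=True)
class UTXO:
    ref: str     # identifies the output, e.g. "tx0:0"
    owner: str   # stand-in for the script that locks the output
    amount: int  # in satoshi


def spend(utxo_set, inputs, sender, recipient, amount, fee, tx_id):
    """Consume `inputs` and return the new UTXO set and the created outputs."""
    for utxo in inputs:
        if utxo not in utxo_set:
            raise ValueError("input is missing or already spent")
        if utxo.owner != sender:
            raise ValueError("sender does not own this input")
    change = sum(utxo.amount for utxo in inputs) - amount - fee
    if change < 0:
        raise ValueError("inputs do not cover amount plus fee")
    outputs = [UTXO(f"{tx_id}:0", recipient, amount)]
    if change > 0:
        outputs.append(UTXO(f"{tx_id}:1", sender, change))  # change output
    return (utxo_set - set(inputs)) | set(outputs), outputs


def balance(utxo_set, owner):
    """A balance is just the sum of the unspent outputs an owner controls."""
    return sum(utxo.amount for utxo in utxo_set if utxo.owner == owner)


utxo0 = UTXO("tx0:0", "alice", 2 * SATOSHI_PER_BTC)
utxo_set, outputs = spend(
    {utxo0}, [utxo0], "alice", "bob",
    amount=SATOSHI_PER_BTC // 2, fee=100_000, tx_id="tx1",
)
print(balance(utxo_set, "alice") / SATOSHI_PER_BTC)  # 1.499
print(balance(utxo_set, "bob") / SATOSHI_PER_BTC)    # 0.5
```

The fee is never written as an output: it is simply the difference between the inputs and the outputs, which the miner of the block collects.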

The Account Model

The Account model is closer to a digital representation of traditional bank accounts. At every state transition a set of all accounts and balances is stored, instead of a set of receipts from which account balances must be calculated like in the UTXO model. To start a state transition, a transaction needs to be initiated which instructs the system to change the balance. The system then computes the changes of balances in each account and in the next state the new set of balances is stored.

In the UTXO system, every transaction input (a UTXO received from a previous transaction) is individually validated, and the sum of the inputs must be at least as large as the sum of the outputs, while in the Account model the account balance must be at least as large as the transaction outputs. This means that in the UTXO system, multiple UTXOs can be combined and individually validated to create one or multiple transaction outputs, while in the Account model only the balance needs to be validated.

For more information about the UTXO model vs the Account model, I highly recommend reading this write-up by Horizen.io on the subject.

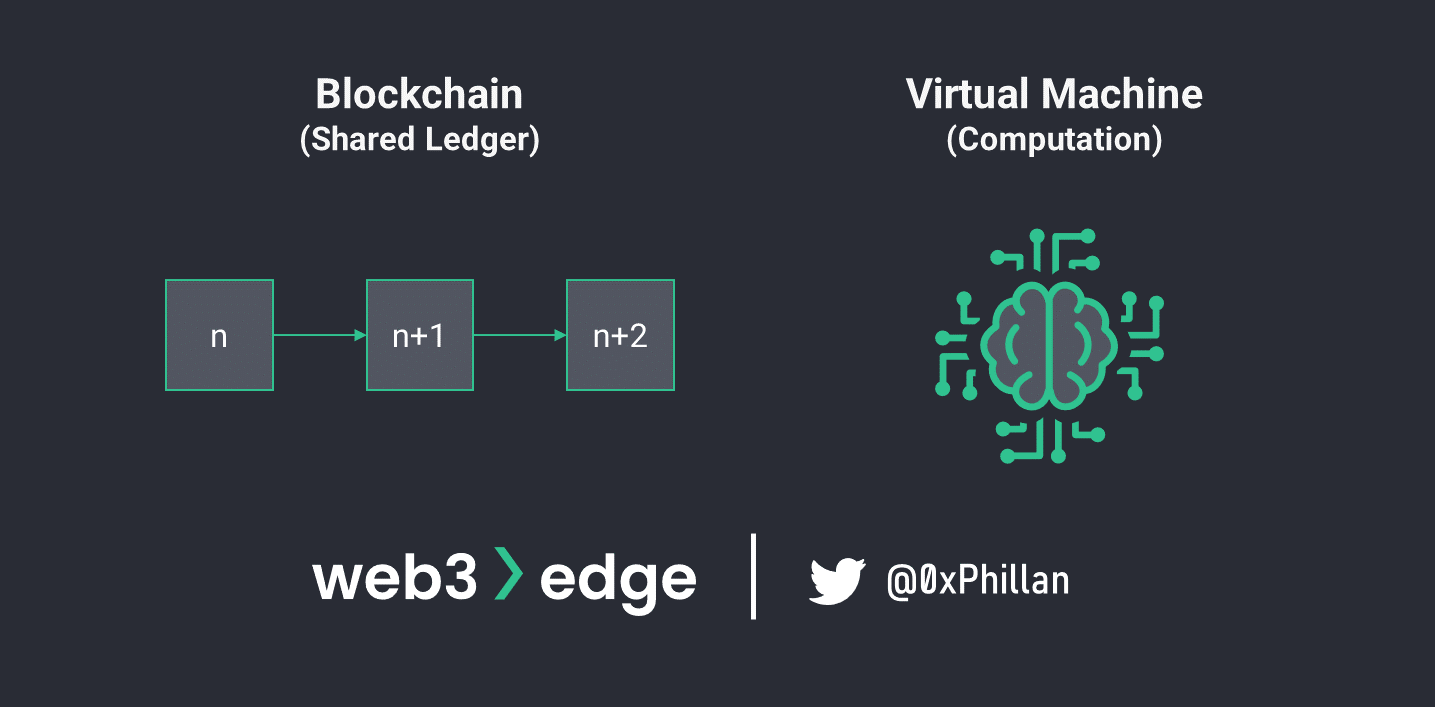

Virtual Machines (VMs), Smart Contracts & Turing Completeness

A virtual machine is a piece of software that emulates a computer. Instead of a physical device, all physical components of the virtual computer run as software within another system. For example, a Windows VM can be run on macOS, allowing an entire Windows system to run inside of macOS. The physical components of the Windows machine are emulated in software, so that the Windows system is none the wiser.

This same concept is applied to blockchain networks as well: a separate virtual machine component exists alongside the shared ledger, which allows for computation tasks to be executed. This means that apart from a shared ledger in which balances (Account model) or changes to balances (UTXO model) are stored, there is a separate computation component that calculates the balances. This computation component can also be used for more complex logic beyond simple balance calculations. This is what paved the way for smart contracts – more on that later. The first such system that was widely successful was the Ethereum Virtual Machine (EVM).

Bitcoin Script can also be considered a virtual machine, as it is the computation component of the Bitcoin network which nodes use to validate UTXOs and execute transactions. Bitcoin Script, however, is quite limited and incapable of running complex logic like the EVM.

The Ethereum Virtual Machine (EVM)

The EVM is a piece of software that emulates a specific computer system that runs on Ethereum nodes. The main purpose of the EVM is to compute the world state of the Ethereum network, and to run smart contracts. The novelty of the EVM is two-fold:

- The EVM enables decentralized computation of the world state, including the execution of somewhat complex computing logic of smart contracts

- The EVM enables autonomous and trustless execution of code on decentralized blockchain networks (smart contracts)

When a network claims “EVM-compatibility”, it means that the network can deploy and execute smart contracts that were written for the Ethereum Virtual Machine. The EVM is the most popular virtual machine and has become the de-facto standard for smart contract computation in Web3. Having EVM compatibility allows newer networks to bootstrap their ecosystems by enabling easier porting of projects to their networks. This standardization also makes the bridging of tokens easier between networks, as both networks can run the same code.

For a fantastically self-explanatory deep-dive on the EVM’s architecture, I direct the reader to this walkthrough by Takenobu T. (since “the Merge”, which marks the transition of the Ethereum ecosystem from PoW to PoS on Sep 15th 2022, the PoW aspects of this presentation are out of date).

Smart Contracts

A smart contract is a program that is stored on a decentralized network and can be executed autonomously by the VM when specific conditions are met. These conditions can be triggered when specific events occur on the network, or when a user interacts with the smart contract. The complex computation capabilities of smart contracts also enable the creation of ERC-20 tokens and NFTs (non-fungible tokens).
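To give a feel for “code that enforces its own conditions”, here is a toy model of a contract written in Python rather than in a real smart contract language. The `TimeLockedEscrow` class, its fields and the block heights are all invented for this sketch; on a real network the equivalent logic would be compiled to bytecode and run by the VM on every node.

```python
class TimeLockedEscrow:
    """Toy contract: holds a deposit for the seller until a given block height."""

    def __init__(self, seller, amount, release_height):
        self.seller = seller
        self.amount = amount
        self.release_height = release_height
        self.paid_out = False

    def release(self, current_height, balances):
        # The conditions are enforced by the code itself, not by a trusted party.
        if self.paid_out:
            raise RuntimeError("already paid out")
        if current_height < self.release_height:
            raise RuntimeError("release height not reached yet")
        balances[self.seller] = balances.get(self.seller, 0) + self.amount
        self.paid_out = True


balances = {"seller": 0}
escrow = TimeLockedEscrow(seller="seller", amount=5, release_height=100)

try:
    escrow.release(current_height=99, balances=balances)
except RuntimeError as error:
    print("rejected:", error)

escrow.release(current_height=100, balances=balances)
print(balances)  # {'seller': 5}
```

Anyone can call `release`, but the payout only happens once the coded condition holds, and it can only happen once.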

Smart contracts and the EVM are what pushed the industry beyond blockchain and crypto, enabling the concept of Web3: thanks to these innovations, it is possible to have composable applications that run autonomously on an un-censorable decentralized network. The combination of these innovations is what gave rise to Web3’s vast dApp ecosystems.

A dApp is a decentralized application, which uses a combination of smart contracts and often also an easily accessible web-based front-end to enable interaction with a blockchain network. The smart contracts of dApps can also be accessed directly through a node; however, web-based front-ends greatly lower the barrier to access. Probably the most well-known dApp today is Uniswap.

Solidity, Rust and Bitcoin Script

Solidity is the most-used programming language for smart contracts on the Ethereum blockchain. Developers code their smart contracts in Solidity, compile them into bytecode and then deploy the bytecode to the network. Solidity is an object-oriented and statically-typed programming language, which is based on C++, Python and JavaScript.

Rust is one of the most popular programming languages for smart contracts on the Solana, Polkadot and NEAR blockchains. Rust is a low-level statically-typed programming language, which is known for its speed, efficiency and design best practices. While it is a younger language, it was voted the most loved programming language on StackOverflow in both 2020 and 2021. Just as with Solidity, Rust code is compiled and the resulting bytecode is deployed to the respective network.

Blockchains accept various programming languages as long as the code can be compiled into bytecode that the network can read and interpret. This also holds true for Bitcoin, for which the primary scripting language is Bitcoin Script. The difference between Bitcoin Script and Solidity/Rust is that Bitcoin Script is not a general-purpose programming language, but a scripting system used for transactions. In Bitcoin, a script is a list of instructions recorded with each transaction that describes how the next person wanting to spend the Bitcoins being transferred can gain access to them. Remember that UTXOs are unspent transaction outputs, so every output can have requirements attached which need to be met before the output is allowed to become an input in another transaction.
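The idea of an output carrying spending requirements can be sketched with a toy script evaluator. `OP_SHA256` and `OP_EQUAL` are real Bitcoin Script opcodes, but the `evaluate` and `can_spend` helpers, the list-based script encoding and the simple hash-lock used here are assumptions for illustration; real scripts typically also require a signature check.

```python
import hashlib

def evaluate(script):
    """Run a script (a list of opcodes and data items) on a stack."""
    stack = []
    for item in script:
        if item == "OP_SHA256":
            stack.append(hashlib.sha256(stack.pop()).digest())
        elif item == "OP_EQUAL":
            a, b = stack.pop(), stack.pop()
            stack.append(a == b)
        else:
            stack.append(item)  # anything else is data pushed onto the stack
    # The spend is valid only if the script leaves True on top of the stack.
    return bool(stack) and stack[-1] is True

# Locking script attached to an output: "whoever reveals the preimage of
# this hash may spend me".
locking_script = ["OP_SHA256", hashlib.sha256(b"secret").digest(), "OP_EQUAL"]

def can_spend(unlocking_script):
    """The spender's unlocking script runs first, then the locking script."""
    return evaluate(unlocking_script + locking_script)

print(can_spend([b"secret"]))  # True
print(can_spend([b"guess"]))   # False
```

The script is evaluated once, from left to right, with no way to jump backwards, which is why it stays simple and predictable.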

Turing-Completeness

The differences between Solidity/Rust and Bitcoin Script become even clearer when looking at them through the lens of Turing-completeness. Turing-completeness refers to the idea of an abstract machine (Turing machine) which, given infinite time and computing resources, is capable of solving any computable problem, that is, any problem that can be coded or logically constructed.

More complex logical problems require the use of conditional statements and loops, which Solidity and Rust as full programming languages support. Bitcoin Script supports simple conditionals, but it deliberately has no loops. This is because Bitcoin does not allow for complex computation, but instead relies on a rather simple instruction set that works only around the idea of transactions (no general-purpose smart contracts). While this makes Bitcoin less error-prone and arguably more secure, it does limit its programmability.

Ethereum, Solana and Polkadot can be considered quasi-Turing-complete. Although they are capable of complex computation thanks to Solidity and Rust, and could in theory solve any logical problem given enough time, they are limited by gas fees. Gas fees are fees the network charges for the execution of any computational tasks. While time and computational resources can be theoretically infinite, the amount of native network tokens may not be. So while theoretically these networks are Turing-complete, in practice they can at best be considered quasi-Turing-complete.
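The effect of gas on an otherwise unbounded computation can be sketched as follows. This is an illustrative model only: `run_with_gas`, `OutOfGas` and the flat cost of one gas unit per step are assumptions, whereas real networks price each operation individually.

```python
class OutOfGas(Exception):
    """Raised when a computation exhausts its gas budget."""

def run_with_gas(step, gas_limit, gas_per_step=1):
    """Call step() until it returns False or the gas runs out.

    Returns the number of steps executed.
    """
    gas = gas_limit
    steps = 0
    while True:
        if gas < gas_per_step:
            raise OutOfGas(f"halted after {steps} steps")
        gas -= gas_per_step
        steps += 1
        if not step():
            return steps

def countdown(n):
    """Build a step function that finishes after n calls."""
    remaining = [n]
    def step():
        remaining[0] -= 1
        return remaining[0] > 0
    return step

print(run_with_gas(countdown(5), gas_limit=100))  # 5

try:
    run_with_gas(lambda: True, gas_limit=100)  # would otherwise loop forever
except OutOfGas as err:
    print("out of gas:", err)  # out of gas: halted after 100 steps
```

The language itself can express the infinite loop, but the budget guarantees that every execution halts, which is the sense in which these networks are only quasi-Turing-complete.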

The distinction between Turing-complete and non-Turing-complete networks is important for understanding the capabilities of a network and what could be built on it. There are further nuances to Turing machines and Turing-completeness, which the interested reader can read more about here.

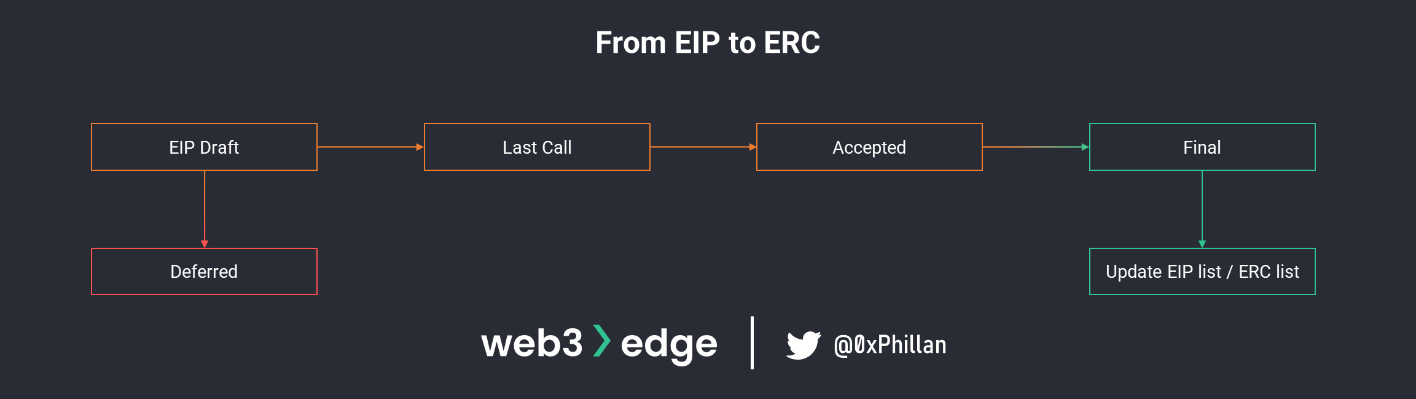

From EIPs to ERCs

ERC (Ethereum Request for Comments) refers to a technical coding standard used on the Ethereum blockchain. An ERC dictates a number of rules and actions that an Ethereum smart contract must follow and how to implement them.

An ERC, however, is already an agreed-upon standard that has been included in Ethereum documentation which developers agree to use. Before an ERC becomes an ERC, it starts as an EIP (Ethereum Improvement Proposal). EIPs are essentially very detailed forum posts in which users can argue, discuss and vote on changes to the Ethereum blockchain and ecosystem.

This system is very widely used across the Web3 ecosystem from networks (e.g., Bitcoin uses BIPs – Bitcoin Improvement Proposals) to dApps (e.g., AAVE uses AIPs – AAVE Improvement Proposals).

ERC Token Standards

ERC-based tokens live on the Ethereum network, but they are technically distinct from the Ethereum token (ether, ETH), which is the native token of the Ethereum network. While the Ethereum token is defined as part of the network and is the underlying “currency” of the network to pay for transactions and smart contract execution in the form of gas fees, ERC-based tokens are defined in smart contracts.

The ERC-standard smart contracts define all parameters of the token and all its behaviors, and can be viewed online using etherscan.io or any other block explorer for EVM-compatible networks. A block explorer is a tool that allows you to view real-time and historical information stored on a blockchain. As a result of this standardization, the behavior of ERC-based tokens is predictable, allowing dApps and other smart contracts to interact with any smart contract that uses those standards.

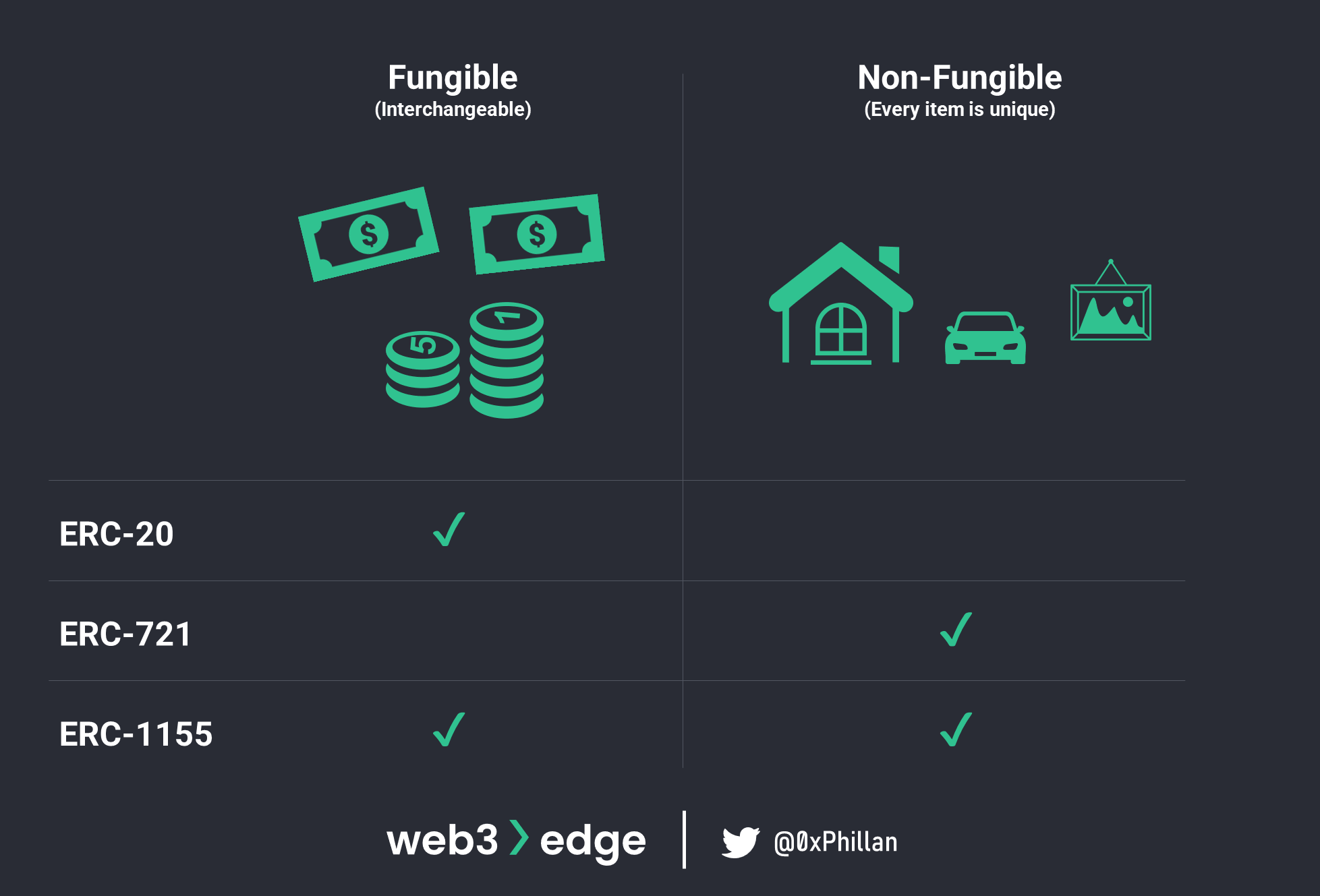

Below we cover ERC-20, ERC-721, ERC-1155 and ERC-4626 standards. The first three relate to creating fungible and non-fungible digital assets that live on blockchains, while ERC-4626 standardizes yield-bearing functionality applied to ERC-20s.

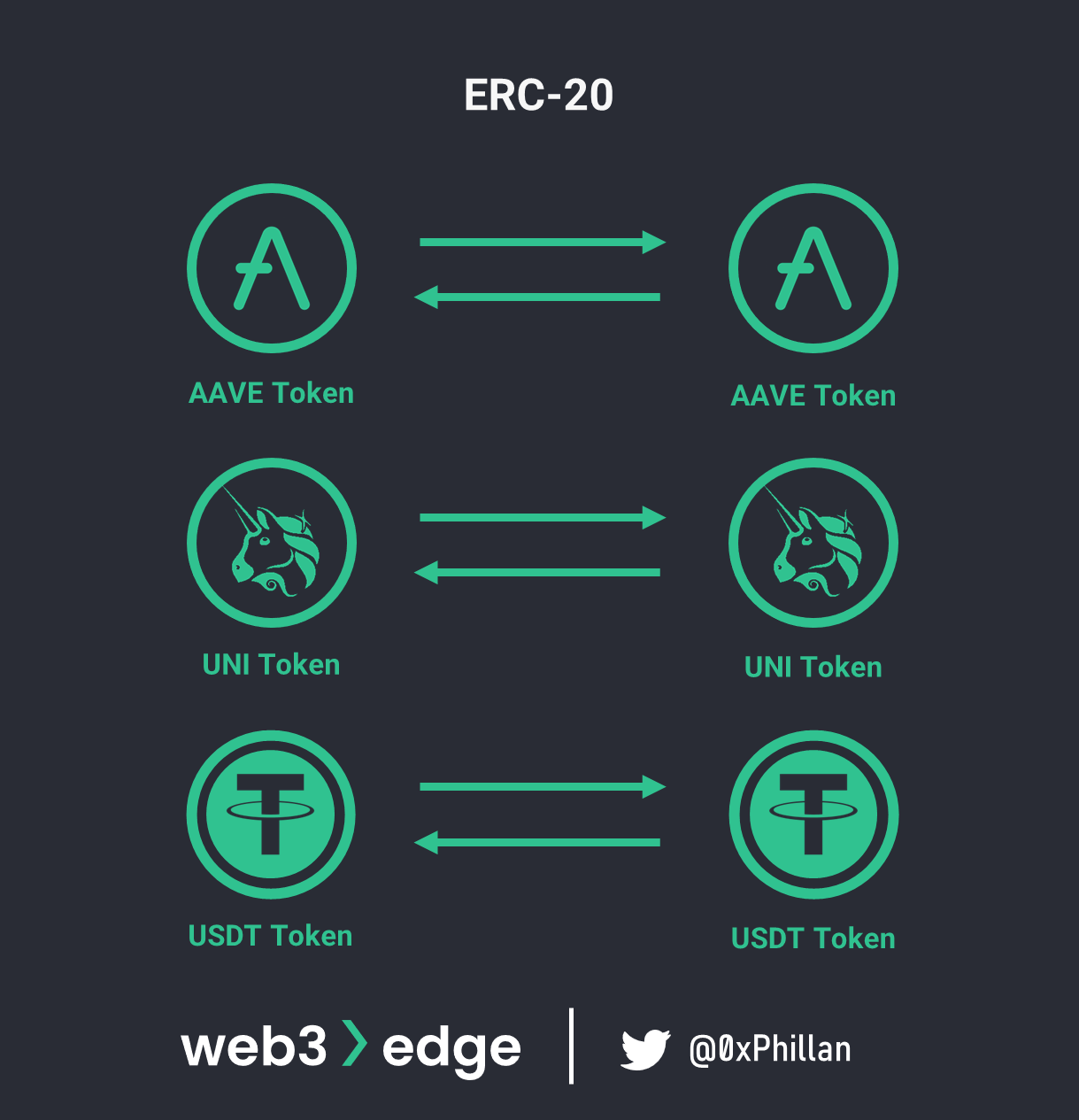

ERC-20 Tokens (Fungible Tokens)

ERC-20 is a standard for fungible tokens. Fungibility refers to the property of one asset to be interchangeable with another of the same asset, with both assets being indistinguishable from each other. For example, a one US dollar bill is fungible, because it can be exchanged with any other one US dollar bill.

The ERC-20 standard enables the creation of fungible tokens on EVM-compatible networks. The Curve token (CRV), the Uniswap token (UNI) and the AAVE token (AAVE) are examples of fungible tokens, as are digital representations of fiat currencies such as USDT and USDC, which are pegged to the US dollar.
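The bookkeeping behind a fungible token can be modelled in a few lines. This is a plain Python sketch rather than a Solidity contract: the method names mirror the `balanceOf`, `transfer`, `approve` and `transferFrom` functions of the ERC-20 interface, while the `FungibleToken` class, the explicit `sender` arguments and the string account names are assumptions for illustration.

```python
class FungibleToken:
    """Minimal in-memory model of ERC-20 style accounting."""

    def __init__(self, initial_holder, supply):
        self.total_supply = supply
        self.balances = {initial_holder: supply}
        self.allowances = {}  # (owner, spender) -> amount still spendable

    def balance_of(self, owner):
        return self.balances.get(owner, 0)

    def transfer(self, sender, recipient, amount):
        if amount < 0 or self.balance_of(sender) < amount:
            raise ValueError("insufficient balance")
        self.balances[sender] = self.balance_of(sender) - amount
        self.balances[recipient] = self.balance_of(recipient) + amount

    def approve(self, owner, spender, amount):
        # The owner allows the spender to move up to `amount` on their behalf.
        self.allowances[(owner, spender)] = amount

    def transfer_from(self, spender, owner, recipient, amount):
        allowed = self.allowances.get((owner, spender), 0)
        if allowed < amount:
            raise ValueError("allowance exceeded")
        self.transfer(owner, recipient, amount)
        self.allowances[(owner, spender)] = allowed - amount

token = FungibleToken("alice", 100)
token.transfer("alice", "bob", 30)
token.approve("alice", "carol", 50)
token.transfer_from("carol", "alice", "bob", 20)
print(token.balance_of("alice"), token.balance_of("bob"))  # 50 50
```

Only amounts are tracked, never individual units, which is exactly what makes the token fungible.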

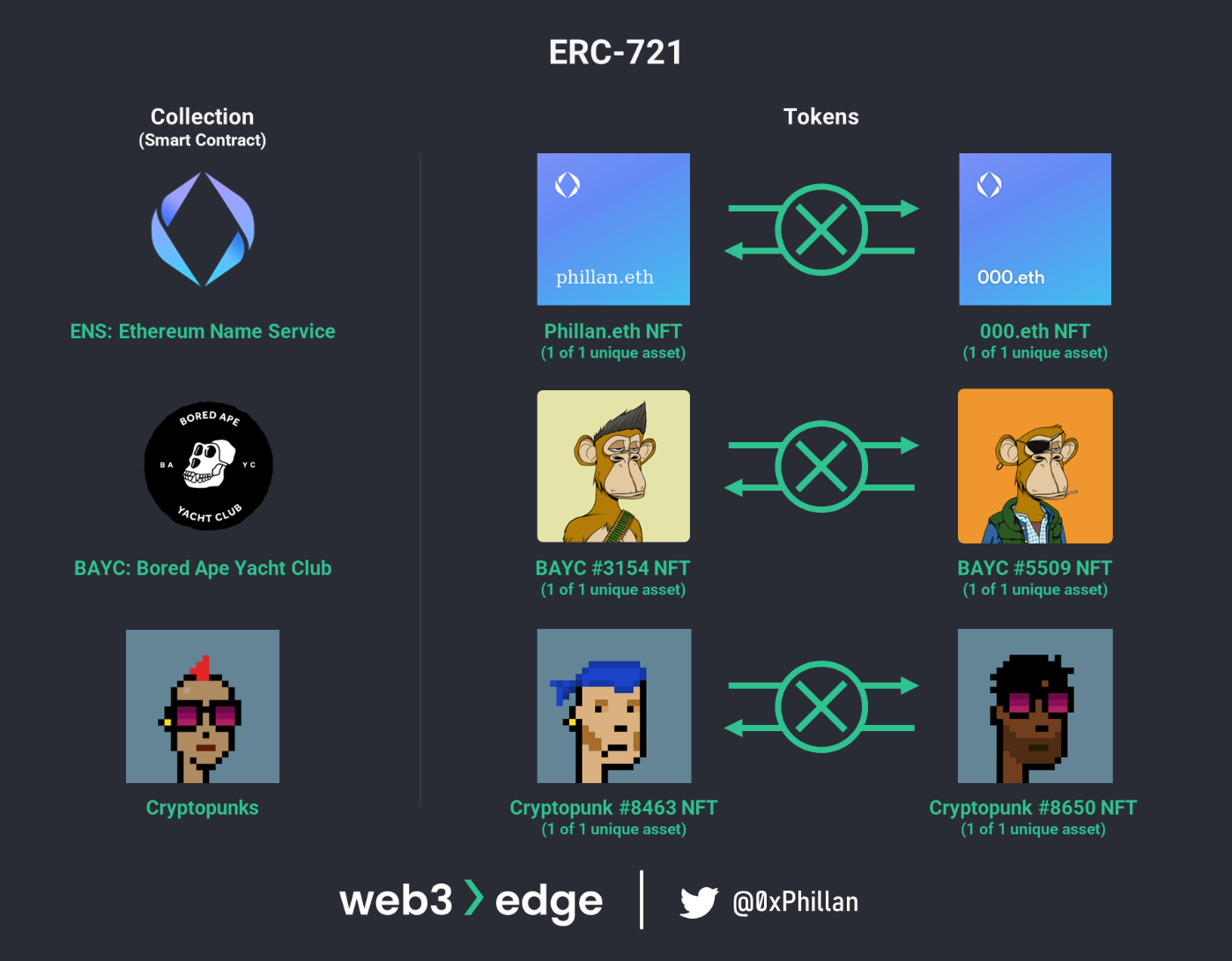

ERC-721 Tokens (Non-Fungible Tokens)

The ERC-721 standard defines non-fungible tokens (NFTs). The unique aspect of NFTs is already in the name: the tokens are non-fungible, which means that every token is unique. NFTs are an exciting development, because the content of each NFT can be whatever the creator wants it to be, from a profile picture to the deed to real estate or any other certificate. NFTs enable publicly verifiable digital ownership of any physical or unique digital asset.

Popular NFTs include Cryptopunks, Bored Ape Yacht Club and Ethereum Name Service (ENS).
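In contrast to fungible-token accounting, a non-fungible token contract tracks who owns each individual token ID. The sketch below is a simplified Python model: `owner_of` mirrors the `ownerOf` function of the ERC-721 interface, while the `NonFungibleToken` class and its `mint` and `transfer` helpers are hypothetical names chosen for illustration.

```python
class NonFungibleToken:
    """Minimal in-memory model of ERC-721 style ownership tracking."""

    def __init__(self):
        self.owners = {}  # token_id -> owner; each ID exists at most once

    def mint(self, to, token_id):
        if token_id in self.owners:
            raise ValueError("token already exists")
        self.owners[token_id] = to

    def owner_of(self, token_id):
        return self.owners[token_id]

    def transfer(self, sender, recipient, token_id):
        if self.owners.get(token_id) != sender:
            raise ValueError("sender does not own this token")
        self.owners[token_id] = recipient

nft = NonFungibleToken()
nft.mint("alice", 1)
nft.mint("alice", 2)
nft.transfer("alice", "bob", 1)
print(nft.owner_of(1), nft.owner_of(2))  # bob alice
```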

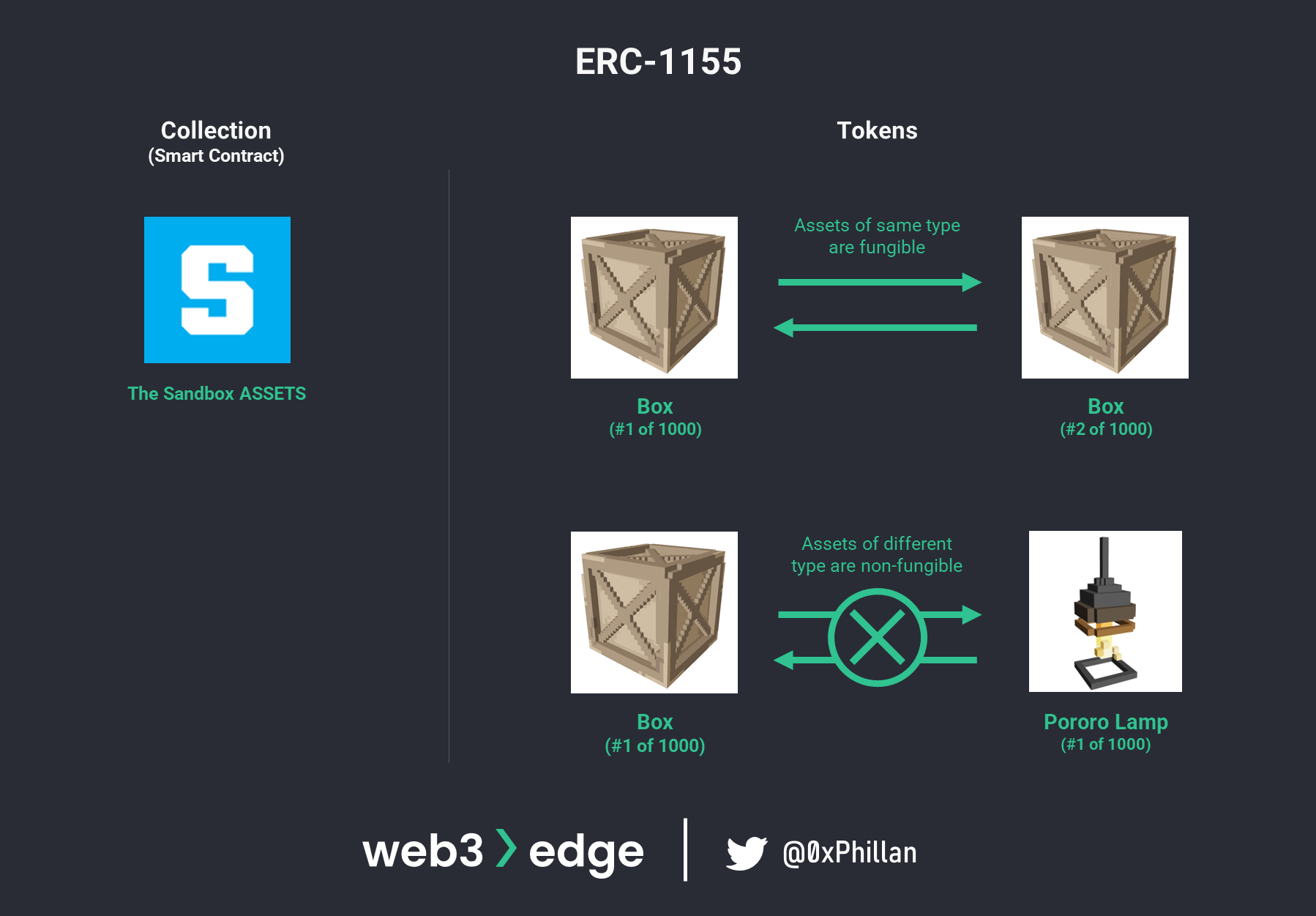

ERC-1155 (Multi-Tokens)

ERC-1155s are so-called “multi-tokens”: they combine the functionality of both ERC-20s (fungible tokens) and ERC-721s (non-fungible tokens). This means that apart from enabling new use-cases through multiple ‘unique’ fungible assets, e.g., a sword in a game (unique) with a supply of 100 (fungible), it is also possible to manage multiple token types within a single smart contract.

Consolidating these functions within a single smart contract creates efficiencies in terms of the space the smart contract uses in the EVM. This also creates simplicity for bigger and more complex projects, as multiple sets of tokens can be managed from a single smart contract.
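A rough model of multi-token accounting keys each balance by both token ID and owner, so one contract can hold fungible and unique items side by side. As before, this is a Python sketch with assumed names (`MultiToken`, `mint`, `batch_transfer`); the actual standard defines functions such as `balanceOf` and `safeBatchTransferFrom`.

```python
from collections import defaultdict

class MultiToken:
    """Minimal in-memory model of ERC-1155 style accounting."""

    def __init__(self):
        self.balances = defaultdict(int)  # (token_id, owner) -> amount

    def mint(self, to, token_id, amount):
        self.balances[(token_id, to)] += amount

    def balance_of(self, owner, token_id):
        return self.balances[(token_id, owner)]

    def batch_transfer(self, sender, recipient, token_ids, amounts):
        # Work on a copy so the batch either applies fully or not at all.
        updated = self.balances.copy()
        for token_id, amount in zip(token_ids, amounts):
            if amount < 0 or updated[(token_id, sender)] < amount:
                raise ValueError(f"insufficient balance for token {token_id}")
            updated[(token_id, sender)] -= amount
            updated[(token_id, recipient)] += amount
        self.balances = updated

SWORD, CROWN = 1, 2           # hypothetical token IDs
game = MultiToken()
game.mint("alice", SWORD, 100)  # a sword type with a supply of 100
game.mint("alice", CROWN, 1)    # a one-of-a-kind item
game.batch_transfer("alice", "bob", [SWORD, CROWN], [10, 1])
print(game.balance_of("bob", SWORD), game.balance_of("bob", CROWN))  # 10 1
```

Moving several token types in one call is where the efficiency gain comes from: a single contract and a single transaction replace what would otherwise be several.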

Popular ERC-1155s include ENJIN NFTs, which track in-game assets across a handful of blockchain-based games, as well as ticketing applications that may need to regularly create large sets of unique assets as part of one contract. Examples of projects that use ERC-1155 include The Sandbox Metaverse, Fanz and Azure Heroes.

ERC-4626 (The Vault Standard)

ERC-4626 standardizes token vaults. A vault is a yield-bearing smart contract that accepts ERC-20 token deposits and issues share tokens to the depositor in return. It is essentially an asset management smart contract: the share tokens represent a claim on the vault’s holdings and can later be redeemed for the tokens that were initially deposited, plus any yield the vault has earned.

For example, xSushi is a yield-bearing token that can be redeemed for the SUSHI token (the governance token of the SushiSwap dApp), and essentially represents a user’s share of yield-generating activity in the Sushi DeFi protocol.
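The relationship between deposits, shares and yield can be illustrated with simple proportional accounting. This is a sketch under stated assumptions: the `Vault` class, the `accrue_yield` helper and the rounding-down integer math are chosen for illustration, and a production vault has to handle rounding, fees and edge cases far more carefully.

```python
class Vault:
    """Minimal model of share-based vault accounting."""

    def __init__(self):
        self.total_assets = 0  # underlying tokens held by the vault
        self.total_shares = 0  # share tokens issued to depositors
        self.shares = {}       # owner -> shares held

    def deposit(self, owner, assets):
        if self.total_shares == 0 or self.total_assets == 0:
            minted = assets  # first deposit: one share per asset
        else:
            minted = assets * self.total_shares // self.total_assets
        self.total_assets += assets
        self.total_shares += minted
        self.shares[owner] = self.shares.get(owner, 0) + minted
        return minted

    def accrue_yield(self, assets):
        # Yield raises the assets backing every existing share.
        self.total_assets += assets

    def redeem(self, owner, shares):
        if self.shares.get(owner, 0) < shares:
            raise ValueError("not enough shares")
        assets = shares * self.total_assets // self.total_shares
        self.shares[owner] -= shares
        self.total_shares -= shares
        self.total_assets -= assets
        return assets

vault = Vault()
vault.deposit("alice", 100)         # alice receives 100 shares
vault.accrue_yield(10)              # the vault earns 10 tokens
vault.deposit("bob", 110)           # bob receives 100 shares at the new rate
print(vault.redeem("alice", 100))   # 110
```

Alice gets back her deposit plus the yield earned while she held her shares, whereas Bob, who joined after the yield accrued, paid the higher rate for the same number of shares.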

This token standard enables developers to accept any ERC-20 token without having to integrate every token manually and account for its specific design decisions. This reduces the risk of coding errors that could lead to loss of assets.

Yearn V3 was the first major protocol to use the ERC-4626 standard, with protocols such as Balancer and Rari Capital having started to implement the standard as well.

Blockchains vs. Directed Acyclic Graphs (DAGs)

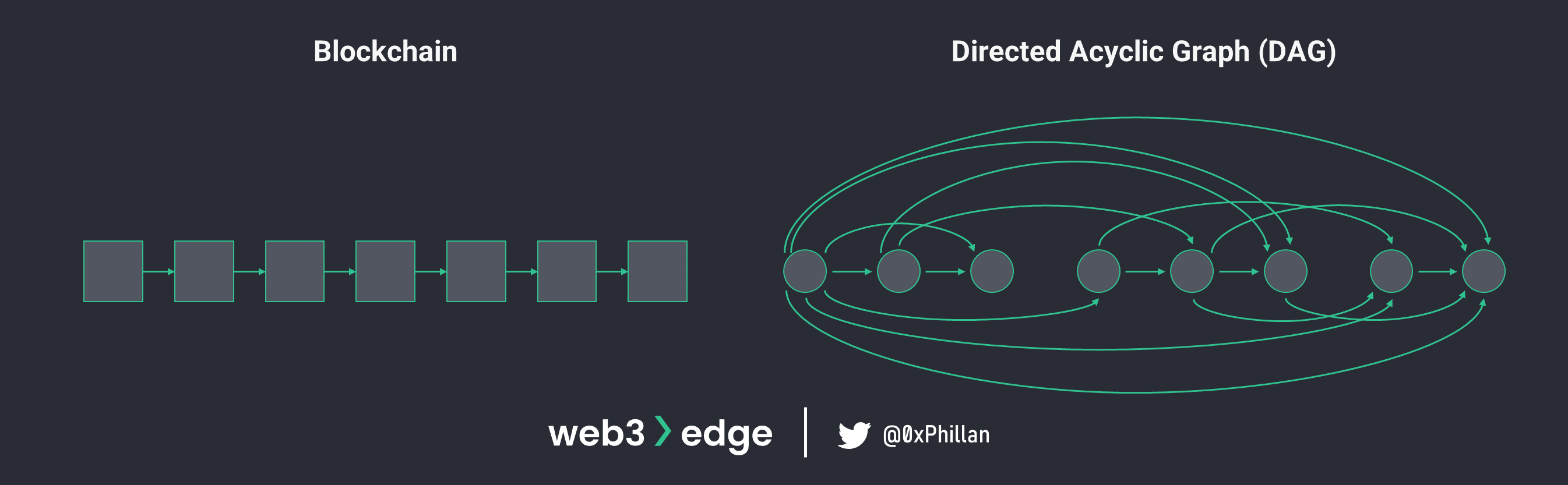

Directed Acyclic Graphs (DAGs) are a different approach to structuring data and some projects use them as an alternative approach to the blockchain shared ledger structure. Blockchains have transactions included in blocks and blocks are validated and chained in chronological order. The blockchain is replicated across all nodes on the network.

In DAGs, transactions are validated one by one, and every transaction is linked to the next. In order to validate a transaction, two more transactions dictated by the network must be validated as well. This leads to a web-like structure that can easily be expanded and allow for parallel computation of transactions, which can greatly increase throughput speeds. Since it is quite straightforward to validate transactions, miners play a very small role in this system: any user that interacts with the network can validate transactions of other users, which heavily reduces transaction costs.

The term Directed Acyclic Graph describes the structure quite well:

- Directed: the data structure can only move in one direction (adding new data)

- Acyclic: when moving along the directed path between data points, it is impossible to run into a previous data point (non-circular)

- Graph: a non-linear data structure consisting of nodes/vertices and edges (connections between the nodes)
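These three properties can be seen in a small model where every new transaction references earlier ones. The `Dag` class and its methods are hypothetical, and letting the caller pick which transactions to approve is a simplification; real DAG-based networks have their own selection rules.

```python
class Dag:
    """Toy transaction DAG: each transaction approves earlier transactions."""

    def __init__(self):
        self.approves = {"genesis": ()}  # tx id -> transactions it approves

    def add(self, tx_id, parents):
        if tx_id in self.approves:
            raise ValueError("duplicate transaction")
        missing = [p for p in parents if p not in self.approves]
        if missing:
            raise ValueError(f"unknown parents: {missing}")
        # Edges only ever point at transactions that already exist, so the
        # graph is directed and can never contain a cycle.
        self.approves[tx_id] = tuple(parents)

    def tips(self):
        """Transactions that nothing has approved yet."""
        approved = {p for ps in self.approves.values() for p in ps}
        return [tx for tx in self.approves if tx not in approved]

    def ancestors(self, tx_id):
        """Everything directly or indirectly approved by tx_id."""
        seen, stack = set(), list(self.approves[tx_id])
        while stack:
            tx = stack.pop()
            if tx not in seen:
                seen.add(tx)
                stack.extend(self.approves[tx])
        return seen

dag = Dag()
dag.add("a", ["genesis"])
dag.add("b", ["genesis"])
dag.add("c", ["a", "b"])  # approves two earlier transactions
dag.add("d", ["a", "b"])  # added in parallel with c
print(dag.tips())                  # ['c', 'd']
print(sorted(dag.ancestors("c")))  # ['a', 'b', 'genesis']
```

Because `c` and `d` do not depend on each other, they can be added side by side rather than waiting for a single next block.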

While this structure introduces benefits with regard to transaction throughput, validation speed and transaction costs, DAGs face a different set of challenges. Although in theory this system allows for strong decentralization, a reduction in transactions can lead to a reduction in network security: fewer transactions mean fewer randomized validators, which increases the likelihood of a single validator or set of validators controlling the majority of transactions. If a single entity controls a majority of network activity, it becomes easier to introduce malicious activity to the network.

To combat the above challenge, DAG-based networks have moved to centralized solutions: implementing central coordinators that route to-be-validated transactions, controlling ‘witness’ validators with elevated authority, or making the validation network private altogether.

Despite these challenges, DAG networks fill an important niche in the Web3 ecosystem: they are slightly more centralized high throughput networks that can manage heavy transaction loads and will find more use-cases as mainstream adoption of Web3 progresses.

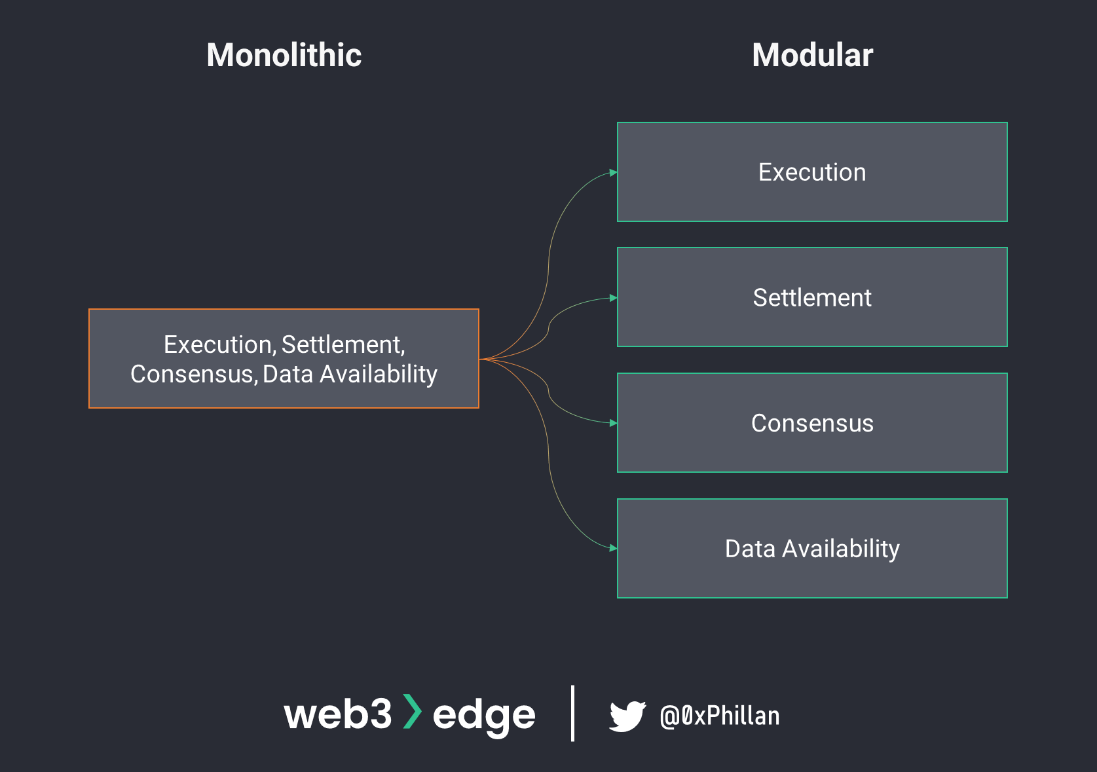

Monolithic vs. Modular Blockchains

Decentralized networks are complex systems that consist of various components which interoperate to create trustless and immutable networks. Networks such as Bitcoin, Ethereum, Solana, Polkadot and NEAR are all considered monolithic blockchains – they are all networks that are “formed from a single piece” where any change to one component requires an update of the entire network. Modular blockchains take these various components and let them be swapped out for other components.

The various components of a modular blockchain system include:

- Execution layer: Transaction execution and smart contracts

- Settlement layer: Transaction validation, transaction settlement

- Consensus layer: Consensus mechanisms

- Data availability: Shared ledger

By splitting the system into multiple components, optimizations can be implemented for each component, increasing efficiency and security of each component. Layer2s, which are to be covered in detail in the next part of this series, can be considered a first step into modularity. Layer2s offload the execution layer and execute transactions and smart contracts on a separate network and feed the results back to the Layer1 monolithic network where settlement, consensus and the shared ledger are managed.
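The division of labour described above can be sketched as two small pieces of code: an execution function that processes a batch of transfers, and a base layer that only records a compact fingerprint of the result. Everything here is an illustrative assumption (the `execute`, `commitment` and `BaseLayer` names, the JSON-plus-SHA-256 fingerprint, the balance format); real Layer2 designs add proofs or dispute mechanisms so that the base layer can trust the posted result.

```python
import hashlib
import json

def execute(state, transactions):
    """Execution layer: apply a batch of transfers and return the new state."""
    state = dict(state)
    for sender, recipient, amount in transactions:
        if amount < 0 or state.get(sender, 0) < amount:
            continue  # skip invalid transfers
        state[sender] -= amount
        state[recipient] = state.get(recipient, 0) + amount
    return state

def commitment(state):
    """Deterministic fingerprint of a state, small enough to post on-chain."""
    encoded = json.dumps(state, sort_keys=True).encode()
    return hashlib.sha256(encoded).hexdigest()

class BaseLayer:
    """Stand-in for the layer handling settlement, consensus and the ledger."""

    def __init__(self):
        self.ledger = []

    def settle(self, batch_commitment):
        self.ledger.append(batch_commitment)

layer1 = BaseLayer()
state = {"alice": 10}
state = execute(state, [("alice", "bob", 4), ("bob", "carol", 1)])
layer1.settle(commitment(state))
print(state)               # {'alice': 6, 'bob': 3, 'carol': 1}
print(len(layer1.ledger))  # 1
```

Two transfers were executed, yet the base layer stored a single entry, which hints at why moving execution elsewhere can relieve the Layer1 network.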

While there are many benefits to modularity, the modular system is only as strong as its weakest link. By having modular components there is potential for individual components to be targeted more easily. Furthermore, adding modularity to the network also introduces a new level of complexity, both in terms of ensuring the network functions properly from a technical perspective, as well as from the perspective of the value of the network’s native token. If the settlement layer can be replaced with another that uses a different token, it would be challenging for a network to justify the existence of a token in the first place.

Despite these challenges, the concept of modular blockchains provides an exciting direction for new projects and new technologies that could help scale and grow the Web3 ecosystem. Popular modular blockchain projects include Celestia and Cosmos.

Summary – Layer1 Networks

Web3 is a vast concept, combining blockchains, cryptocurrency and the ecosystems built on them and related technology.

Bitcoin is the layer1 network that popularized decentralized blockchain technology, and Ethereum is the network that provided quasi Turing-complete computational functionality which enabled smart contracts. It was the idea of chaining blocks of data by hashing data from earlier blocks, combined with distributing copies of all stored data across many nodes, that enabled immutability and data permanence. Apart from these technical primitives, the node infrastructure had to be in place too for this to work: if there is only one node on the network, then the network is essentially centralized and faces centralization challenges: data can be altered, removed and access to it can be restricted by the node.

Apart from the underlying data structure, there is also the question of how nodes on the network know whether data that is being provided to them is correct. This is summarized as the “Byzantine Generals’ Problem”. Bitcoin solved this problem through its Proof-of-Work consensus algorithm, which requires nodes on the network to solve computationally heavy cryptographic puzzles to prove that they have done the validation work required to validate a block. Alternative consensus algorithms exist, such as Proof-of-Stake, which require far less energy and are considered better for the environment.

Bitcoin and Ethereum, two of the most popular blockchain networks at time of writing, use vastly different bookkeeping models: Bitcoin uses the UTXO model, while Ethereum uses the Account model. The UTXO model can be considered a “verification system”, with every UTXO being a receipt of a transaction. The Account model more closely resembles a database of accounts and balances that is updated with each new block that is added to the blockchain.

Ethereum’s computing component is called the “Ethereum Virtual Machine”, which allows for the execution of smart contracts. Smart contracts are applications that are stored on decentralized blockchain networks that can be executed autonomously based on programmable trigger criteria. Depending on which blockchain you are using, smart contracts may be written in Solidity, Rust or other programming languages.

Standardization of smart contracts was necessary to achieve better interoperability between smart contracts. ERCs are coding standards that have been solidified in the Ethereum documentation and are EIPs “that made it”. EIPs are proposals that anybody in the Ethereum ecosystem can make, and they are open for anybody to view, discuss and vote on. If an EIP is voted in, the proposed changes are applied to the network. The four most popular ERC token standards are ERC-20s (fungible tokens), ERC-721s (non-fungible tokens, or “NFTs”), ERC-1155s (multi-tokens) and ERC-4626 (the vault standard).

While blockchains have been the most popular ledger format for Web3 decentralized networks, alternative formats have emerged as existing structures are adjusted for specific use cases. A directed acyclic graph (DAG) is one example of such an alternative structure, which relies on validating individual transactions instead of full blocks. Modular networks are an extension of the idea that we need to rethink existing structures. Modular networks aim to break decentralized networks into different functional layers that can each be optimized individually.

Closing

This is it for the first part of the Master Web3 Fundamentals series, thank you for reading! If you enjoyed this piece, please consider sharing! If you have any feedback about this piece or want to discuss its contents, reach out to @0xPhillan on Twitter.

If you want to be among the first to read the second part when it’s released, or want uncompromisingly thorough perspectives on recent events in Web3, subscribe to the Web3edge Newsletter and follow @Web3edge_io on Twitter!

This piece was written by 0xPhillan for Web3edge – follow @0xPhillan on Twitter!
